The Spring of 1988

In the spring of 1988, Alan Cooper sat in front of a computer in a large boardroom at the Microsoft headquarters in Redmond, Washington, patiently waiting for Bill Gates to arrive.

At the time, Cooper's main business was writing desktop application software to sell to publishers. “I was one of the first companies to realize that you could retail software without needing to sell a computer,” he recalls. But for the past month, Cooper had been frantically coding in preparation for this Microsoft demo, adding last-minute features to Tripod, a shell construction set for the Windows operating system that he'd been working on as a side project.

In late 1985 or early 1986, a friend had brought Cooper to Microsoft's annual technical conference in Silicon Valley. On stage was Steve Ballmer, presenting the first version of Windows. Cooper was impressed, not by the graphical multitasking system—something he'd already written himself—but by Microsoft's dynamic-link libraries, or DLLs. In Windows, much of the operating system's functionality was provided by DLLs, a new concept of shared libraries with code and data that could be used by more than one program at the same time.

“There were some things that I couldn't do because I didn't have access to the deep guts of the operating system. And there were things that I wanted, which were interprocess communication, dynamic relocation, and [...] dynamic loading of modules that could run and go out without shutting down the operating system. So I went home and I put my little graphic frontend and multitasking dispatcher in the sock drawer and started building software in Windows,” Cooper explains.

In Cooper's eyes, though, Windows had one major drawback. The shell—the graphical face of the operating system where you started programs and looked for files—was rudimentary, lacking overlapping windows and visual polish. Compared to Apple's Macintosh GUI released almost two years earlier, it was clearly an aspect of the project into which Microsoft just hadn't put much effort. “It was a program called MSDOS.exe and it was very clear that somebody had written it in a weekend,” Cooper observes. “[Microsoft] might as well have had a neon sign saying, ‘Market Opportunity.' And it just really intrigued me. So I started saying, ‘Okay, I'm going to build a shell.'”

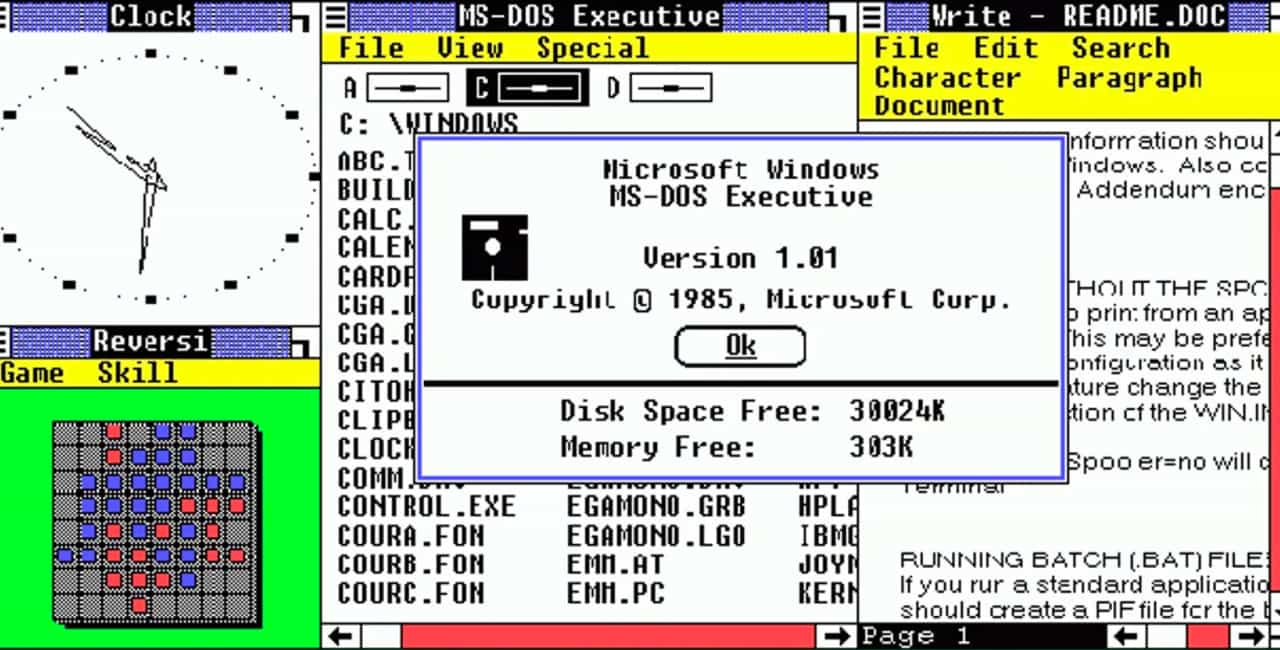

Windows 1.0's 16-bit shell ran on top of MS-DOS

The shell was a program called MS-DOS Executive, run from the command line (the equivalent of the modern-day Explorer or Finder). Windows came with a few built-in programs, like a notepad, calculator, and clock, that could be launched into a non-overlapping application window by clicking the .exe file or an associated file, like a .doc.

Ken Doe gives a detailed ten-minute demo of the Windows 1.0 shell, 30 years after its release.

A (not so) simple shell construction kit

“I started writing little programs that could be shells for Windows,” Cooper remembers. “But that's actually a hard problem! You know, what would a shell be for Windows? It's an operating system that serves a lot of people.”

Cooper's solution to this problem didn't click until late 1987, when a friend at Microsoft brought him along on a sales call with an IT manager at Bank of America. The manager explained that he needed Windows to be usable by all of the bank's employees: highly technical systems administrators, semi-technical analysts, and even users entirely unfamiliar with computers, like tellers. Cooper recalls the moment of inspiration:

In an instant, I perceived the solution to the shell design problem: it would be a shell construction set—a tool where each user would be able to construct exactly the shell that they needed for their unique mix of applications and training. Instead of me telling the users what the ideal shell was, they could design their own, personalized ideal shell.

Cooper began working on this new idea in earnest. His prototype had a palette of controls, such as buttons and listboxes, that users could drag and drop onto the screen, populating “forms.” Some of these controls were preconfigured for common shell functionality, like a listbox that automatically showed the contents of a directory. Michael Geary, one of the programmers that Cooper eventually hired to help with Tripod development, describes naming these elements:

“They weren't called ‘controls' originally. Alan was going to call them ‘waldos', named after remote manipulator arms. I couldn't make sense out of that name, so I called them ‘gizmos'. Microsoft must have thought this name was too frivolous, so they renamed them ‘controls'.”

The “gizmos” would be a large part of what made Tripod groundbreaking: it wasn't so much what the resulting shells could do, but the interaction details which had never been well-implemented on a PC before. Cooper built drag-and-drop protocols and a sprite animation system from scratch.

Tripod also used an event-driven model: when the user performed an action like clicking a button, it would trigger specific code to execute. To connect events fired by one gizmo to actions taken on another, users would drag out an arrow between the gizmos. Geary remembers this as the origin for the programming phrase “fire an event”:

I was looking for a name for one gizmo sending a message to another. I knew that SQL had ‘triggers,' and Windows had SendMessage but I didn't like those names. I got frustrated one night and started firing rubber bands at my screen to help me think. It was a habit I had back then to shake up my thinking. Probably more practical on a tough glass CRT than on a modern flat screen! After firing a few rubber bands, I was still stuck. So I fired up a doobie to see if that would help. As I flicked my lighter and looked at the fire, it all came together. Fire a rubber band. Fire up a doobie. Fire an event!
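The event-driven wiring described above can be sketched in a few lines of Python. This is only an illustration of the general idea, not Tripod's actual design: the `Gizmo` and `ListBox` classes, the `connect` and `fire` method names, and the file names are all assumptions made for the example.

```python
from collections import defaultdict


class Gizmo:
    """Hypothetical stand-in for a Tripod-style control."""

    def __init__(self, name):
        self.name = name
        # Maps an event name to the list of handlers wired to it.
        self._handlers = defaultdict(list)

    def connect(self, event, handler):
        """Wire an event to an action (the 'arrow' between two gizmos)."""
        self._handlers[event].append(handler)

    def fire(self, event):
        """Fire an event, running every handler wired to it in order."""
        for handler in self._handlers[event]:
            handler()


class ListBox(Gizmo):
    """Hypothetical listbox gizmo that simply remembers what it shows."""

    def __init__(self, name):
        super().__init__(name)
        self.items = []

    def show(self, items):
        self.items = list(items)


button = Gizmo("refresh_button")
listing = ListBox("file_list")

# Equivalent of dragging an arrow from the button's click event
# to the listbox's "show" action.
button.connect("click", lambda: listing.show(["AUTOEXEC.BAT", "CONFIG.SYS"]))

button.fire("click")
print(listing.items)
```

The gizmo that fires the event knows nothing about the gizmo that reacts to it; the connection lives entirely in the wiring, which is what made a drag-an-arrow interface plausible.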

“Why can't we do stuff like this?”

Once Cooper had a working prototype, he shopped it to publishers around the Valley. Everyone he spoke to told him the same thing: show it to Microsoft, we don't want any part of competing with them on this.

Cooper had met Bill Gates years back in the early days of Microsoft, but they were hardly on speed dial. Cooper's friend at Microsoft managed to arrange an audience with Gabe Newell, then a mid-level executive who worked on Windows. (Newell went on to become co-founder and CEO of the video game company Valve.)

When they finally met, Newell abruptly stopped Cooper five minutes into his demo: “Bill's got to see this.” He sent Cooper home and arranged for him to come back up to Redmond in a month to meet with Gates directly. Cooper spent the time furiously coding more features into Tripod.

A month later (back to that spring of 1988), Cooper sat in that large Microsoft boardroom, but this time with Gates and an entourage of a dozen Microsoft employees. Cooper ran through the demo.

“It blew his mind, he had never seen anything like it,” Cooper remembers of Gates's reaction, “at one point he turned to his retinue and asked ‘Why can't we do stuff like this?'”

When one of the Microsoft employees pointed out some problems that the tool didn't address, Gates himself leapt to Tripod's defense.

“At that point I knew that something was going to happen,” Cooper says.

Something did happen, although not exactly what he expected. There was no way he could know, sitting in that boardroom, that his project would eventually become Visual Basic, a visual programming environment that would reign for a decade and become the gateway to application programming for countless users. All he knew was that he had built something cool, something that no one had done before—not even the hotshots at Microsoft.

From Tripod to Ruby

Gates wanted Tripod. The parties hammered out a deal over the next few months. Cooper would finish the project and ensure it passed through Microsoft's rigorous formal QA process, at which point it would be bundled in the upcoming Windows 3.0.

With contract in hand, Cooper wrote a detailed spec and hired a team of programmers—Frank Raab, Michael Geary, Gary Kratkin, and Mark Merker. Cooper decided to promptly throw away the 25,000 lines of messy prototype C code that comprised Tripod and start over from scratch, feeling like it was so irredeemably full of time-pressured hacks, it'd simply be easier to rewrite it with a cleaner design. Russell Werner, Microsoft's GM of the DOS and Windows business unit, was not pleased.

“When Russ found out I had discarded [the code], he freaked out,” Cooper recalls.

“He started shouting at me, saying that I would miss our deadlines, I would derail the entire project, and that I would delay the shipment of the whole Windows 3.0 product. Having presented him with a fait accompli, there was little he could do except predict failure.” The team dove into the rewrite—now codenamed “Ruby” to distinguish it from the prototype version.

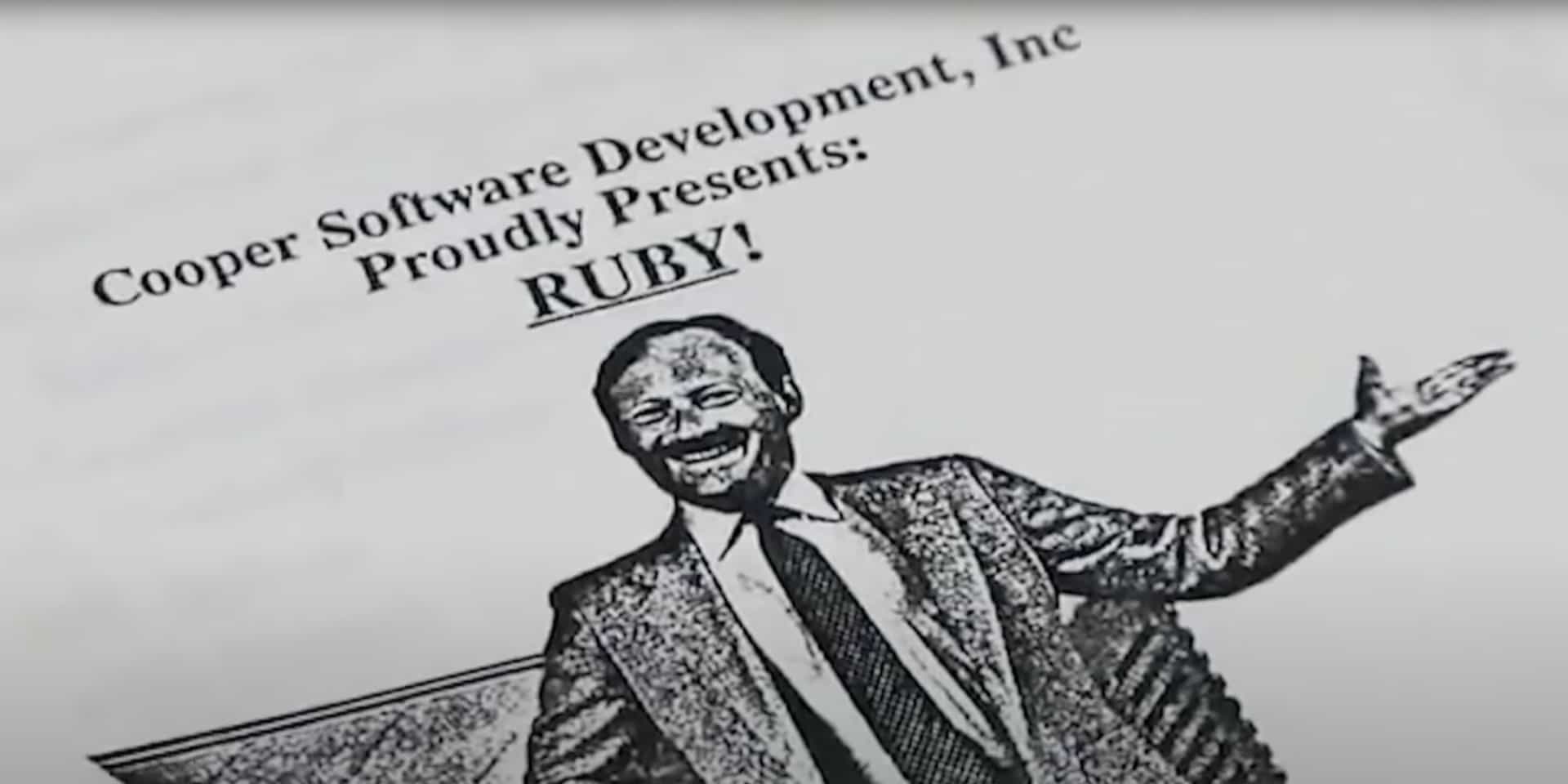

The Ruby Team in spring of 1989

From left to right: Frank Raab, Mike Geary, Alan Cooper, Gary Kratkin and Mark Merker.

A major change was architecting the gizmo palette to load in dynamically (using Windows' new DLL concept), with an API for third-party developers to create and distribute their own gizmos. Cooper describes the design decision:

I envisioned a product where third-party vendors could write their own gizmo DLLs and users could add them to the product in the field without needing to recompile. We defined an interface whereby Ruby would interrogate nearby executable files with a query message. If the file responded appropriately, Ruby knew that it was a cooperating gizmo and proceeded to request its icon to display in the tool palette.
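The discovery handshake Cooper describes can be sketched roughly as follows. This is a simplified model in Python, not Ruby's real protocol: the message names `QUERY_GIZMO` and `GET_ICON`, the `GIZMO_OK` reply, and the `Module` class are hypothetical, and real gizmos were Windows DLLs rather than Python objects.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical message names; the source does not give the real ones.
QUERY_GIZMO = "QUERY_GIZMO"
GET_ICON = "GET_ICON"


@dataclass
class Module:
    """Stand-in for an executable file found next to the host program."""
    filename: str
    is_gizmo: bool = False
    icon: str = ""

    def handle(self, message: str) -> Optional[str]:
        # Only cooperating modules answer; everything else stays silent.
        if not self.is_gizmo:
            return None
        if message == QUERY_GIZMO:
            return "GIZMO_OK"
        if message == GET_ICON:
            return self.icon
        return None


def build_palette(modules):
    """Return {filename: icon} for every module that answers the query."""
    palette = {}
    for module in modules:
        if module.handle(QUERY_GIZMO) == "GIZMO_OK":
            palette[module.filename] = module.handle(GET_ICON)
    return palette


nearby = [
    Module("CALENDAR.DLL", is_gizmo=True, icon="[cal]"),
    Module("NOTEPAD.EXE"),
    Module("GRID.DLL", is_gizmo=True, icon="[grid]"),
]
print(build_palette(nearby))
```

Because the host discovers gizmos by asking rather than by linking against them, a new control can be added by dropping a file alongside the program, with no recompilation of the host.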

Eighteen months after starting work, in early 1990, Cooper's team turned over the golden master to Microsoft right on time. But Windows 3.0, it turns out, would ship eight months late anyway, and would not include Ruby at all.

From Ruby to Thunder

Officially, Ruby wasn't included as the default Windows 3.0 shell because it wasn't keystroke-for-keystroke, pixel-for-pixel identical to the OS/2 shell. The more likely reason, though, was that Ruby was political collateral damage inside of Microsoft. Few remember that Microsoft was at this time simultaneously developing Windows and jointly developing the OS/2 operating system with IBM. Tensions between the teams (OS/2 was originally considered the more strategic product and Windows the underdog) boiled over with Ruby as a proxy fight. Cooper suspects that the root issue was professional jealousy, as some of the engineers on the Windows team had been present at the 1988 demo when Gates had been ever so effusive about Tripod. “He was making all those guys hate me,” Cooper suggests, “because I showed them up, really badly.”

Whatever the cause, Ruby was now orphaned inside of Microsoft less than a year after it was delivered. Frustrated, Cooper flew to Redmond, met with Bill Gates, and offered to buy the software back. “I said, ‘I'll release it myself, as a shell construction set for Windows'.”

Gates refused.

Cooper had no leverage, and Gates, according to Cooper, figured he could keep Ruby around and do something with it.

And indeed he did. Gates loved BASIC—the programming language upon which Microsoft was founded—and believed that, with graphical user interfaces starting to define desktop computing, BASIC needed a visual component. Perhaps inspired by Apple's HyperCard, he came up with the idea of taking Ruby's visual programming frontend and replacing its small custom internal language with BASIC, effectively creating a visual programming language for developers.

In a 1989 Byte article celebrating the 25th birthday of BASIC, Gates hinted at what he'd directed the team to work on:

After all, the best way to design a form is to draw the form, not write code to reproduce it. With a mouse and a palette of pre-drawn graphics images, you should be able to combine lines, boxes, and buttons interactively on a screen to design a form for a program. That kind of interactive design of objects should also let you attach to or combine your creations within a program.

The Business Languages Group at Microsoft was tasked with making Gates's vision a reality. This was not received enthusiastically. The group was already stretched thin, charged with maintaining Microsoft's QuickBASIC IDE, the BASIC compiler, and developing a new language engine (dubbed Embedded Basic) for inclusion in a relational database product codenamed Omega (which would eventually become Microsoft Access).

The Microsoft Business Languages Group in 1990

(Image credit: Scott Ferguson)

To pacify Gates, the unit staffed the project with a team of young, first-time leads, including Scott Ferguson, who was appointed Visual Basic's development lead and architect. Codenaming the project “Thunder,” the rookie team leaned hard on Michael Geary from Cooper's team to ease the transition.

“While we were experts in the intricacies of Windows UI development by the end of the project, we had only rudimentary skills at the start. [We had Michael] to familiarize us with the internals of Ruby and teach us the ‘weirding way' of coercing the Windows USER APIs to make things happen that most Windows developers never cared about or experienced but were stressed to the breaking point by VB,” recalls Ferguson.

The Visual Basic team leads at Microsoft right after Visual Basic 1.0 shipped

According to Ferguson, the photographer thought he needed more people to balance out the composition, so he brought in two of his assistants to act as “filler”.

(Image credit: Scott Ferguson)

Thunder was originally intended to be a quick six-month project, but the complexity of translating Ruby's shell construction kit into a full-fledged programming environment resulted in 18 laborious months of development.

The first breaking change was excising Ruby's “arrows between gizmos” graph for passing messages between controls.

The team considered simply replacing the crude string language with BASIC, but while a visual graph may have worked well for the simpler needs of a shell construction kit, it wouldn't scale for a general programming language. They ultimately decided to borrow Omega's Embedded Basic code editor and event model, a process Ferguson recounts as being “roughly equivalent to reaching into a monkey's brain and pulling out only the mushy bits relating to vision.”

The team settled on a final architecture for Thunder composed of three elements: the Embedded Basic language engine, a forms engine (based on the Ruby code from Cooper's team), and the shell—UI code both ported over from the Omega project and written from scratch.

By the time the project was ready to ship, little of Ruby's code remained. Ferguson recalls being asked if Ruby accounted for more than 15% of the product code, the contractual criterion for including attribution to Cooper's team:

Given that the forms engine was roughly a third of the product and the recognizable parts of Ruby remaining there were surely less than half of that, I might have said ‘no.' But I offered that rounding up seemed fair, given that VB would likely not have happened at all if it were not for Ruby being thrust upon us.

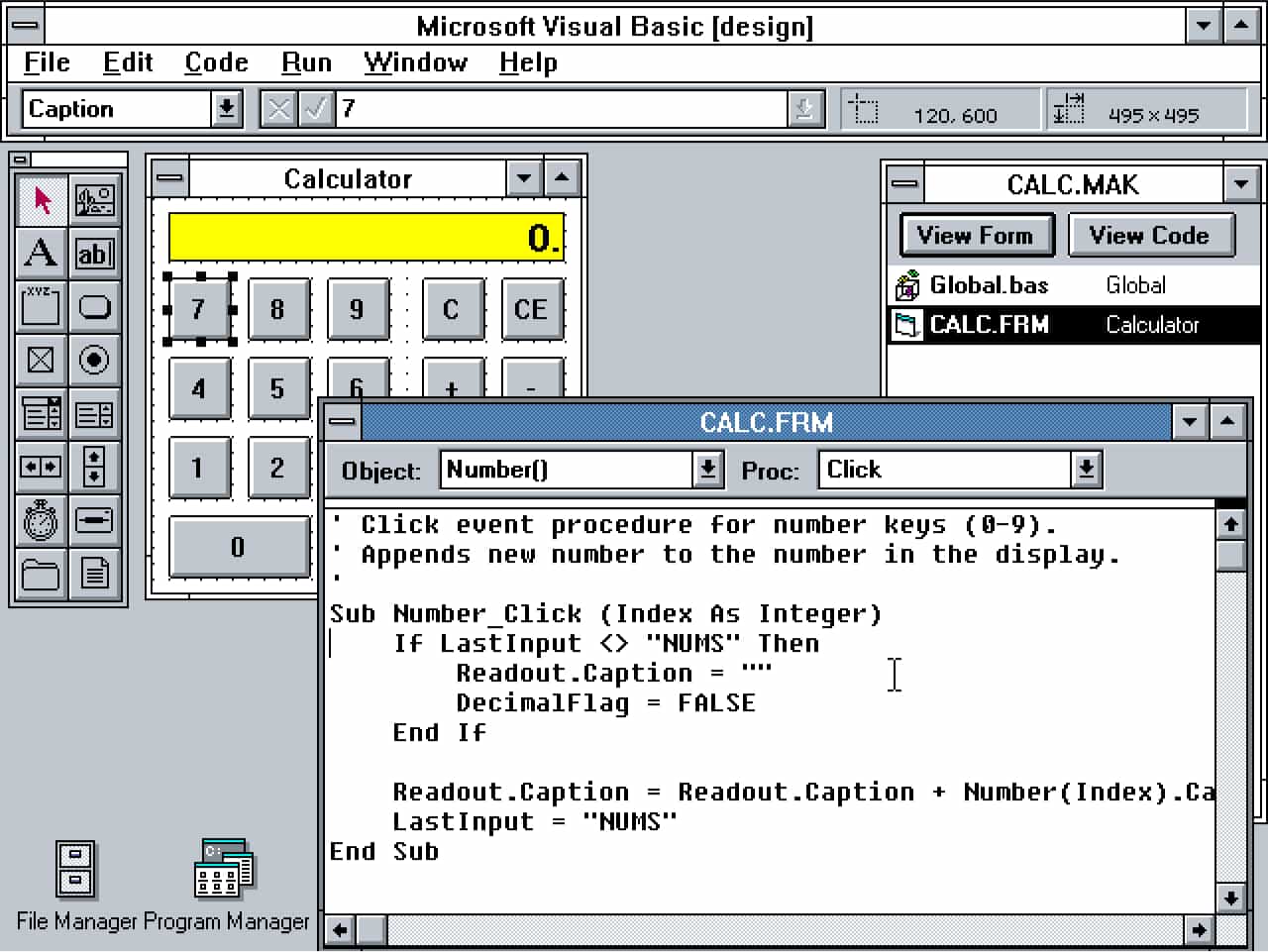

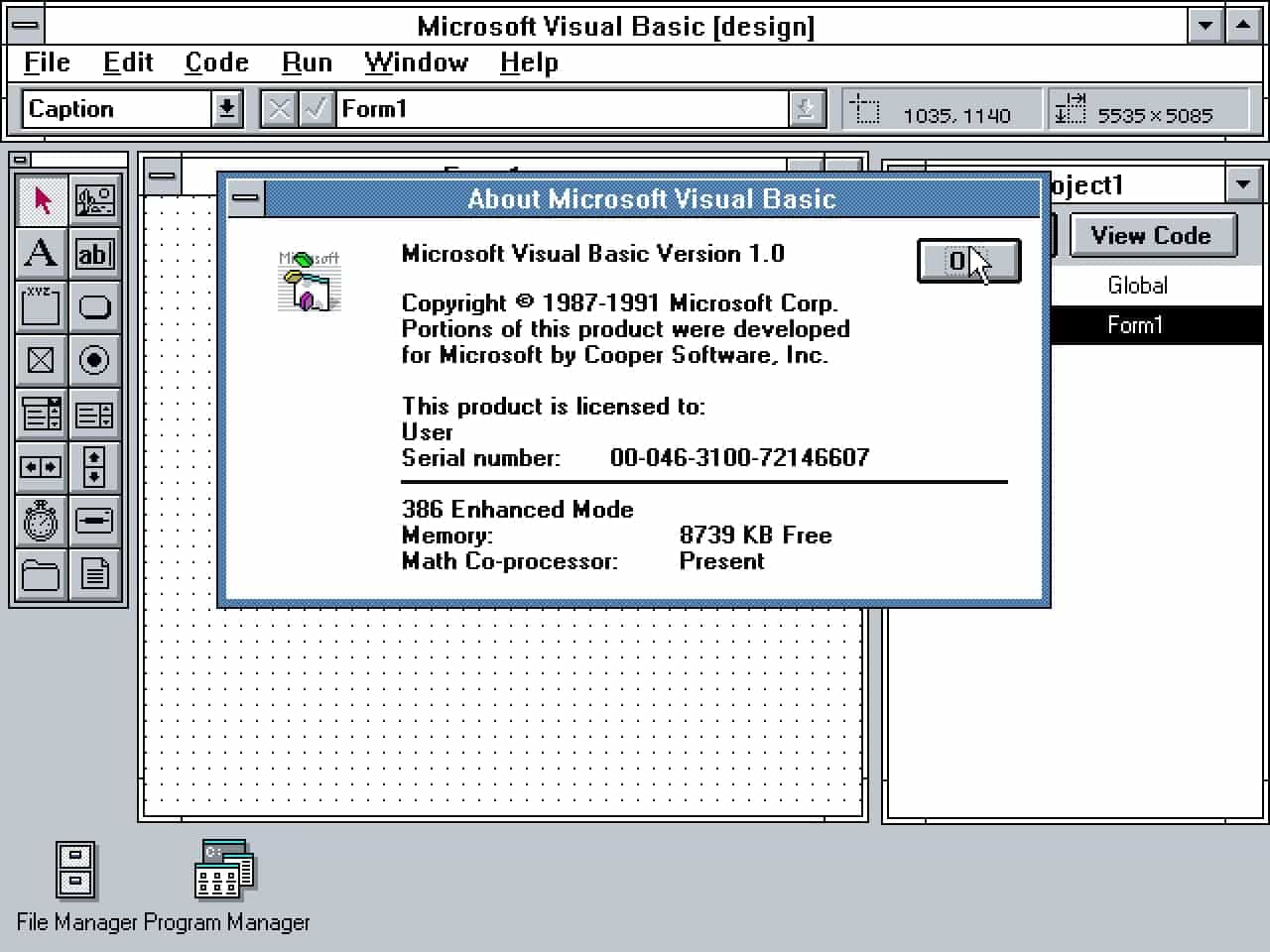

Visual Basic 1.0

Visual Basic 1.0 combined the component tool palette and forms engine from Cooper's Ruby with Microsoft's Embedded Basic engine and Windows shell code.

Alas, the final product horrified Cooper—who loathed BASIC—when he heard about it. When Visual Basic 1.0 was released in 1991—just a year after Windows 3.0—Cooper flew up to Redmond and sat in the front row at the event, frustrated with what Microsoft had done to his baby.

Luckily for Microsoft, the market didn't share Cooper's opinion. Visual Basic was an immediate hit.

An immediate hit

PC Magazine awarded Visual Basic 1.0 for technical excellence in its December 1991 issue.

Bill Gates demos Visual Basic 1.0 at its launch event.

Something pretty right

Visual Basic burst onto the scene at a magical, transitional moment. Microcomputers were officially ascendant in the business world—and businesses needed software to run on them. Windows 3.0 was a huge success, selling 4 million copies in its first year and finally giving users of IBM-compatible PCs a graphical interface that rivaled the Apple Macintosh.

For the hundreds of thousands of mainframe programmers whose jobs were now under threat, though, this transition presented a professional quandary.

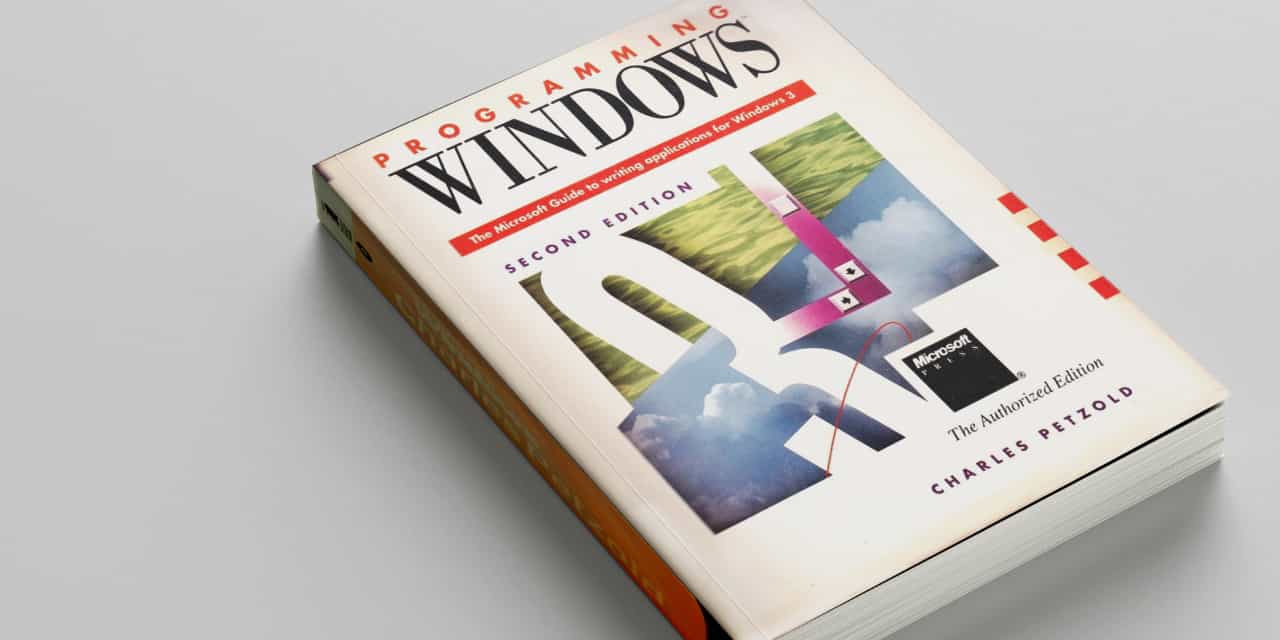

“The prevailing method of writing Windows programs in 1990 was the raw Win32 API. That meant the 'C' Language WndProc(), giant switch case statements to handle WM_PAINT messages. Basically, all the stuff taught in the thick Charles Petzold book. This was a very tedious and complex type of programming. It was not friendly to a corporate ‘enterprise apps' type of programming,” added a Hacker News commenter.

1990's Programming Windows 3.0 by Charles Petzold

The 1000+ page Petzold books were a prerequisite for any developer wrestling with the Windows API.

(Image credit: Foone Turing)

And sure enough, for “millions of mainframe COBOL programmers who were looking with terror at the microcomputer invasion,” remembers Cooper, “Visual Basic basically became their safety net.” Instead of the steep learning curve of C/C++ and the low-level Win32 API, Visual Basic provided a simpler abstraction layer.

To design their UI, developers could drag and drop components onto a WYSIWYG canvas. To add behavior to a UI element, they could simply select it and choose a click event handler from a dropdown. Mainframe programmers were suddenly empowered to quickly get up to speed writing Windows apps.
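The difference between the two programming styles can be sketched as a rough analogy in Python. Neither half is real Windows or Visual Basic code: `wnd_proc` imitates the shape of a C window procedure using plain strings for the message names, and `Button` is a hypothetical class standing in for a control with a click handler.

```python
# Style 1: a single window procedure that branches on every message,
# loosely modeled on the C WndProc pattern. The names match real Windows
# messages, but here they are plain strings used only for illustration.
def wnd_proc(message, log):
    if message == "WM_PAINT":
        log.append("repaint the window")
    elif message == "WM_LBUTTONDOWN":
        log.append("work out which control was hit, then run its logic")
    elif message == "WM_CLOSE":
        log.append("tear down the window")
    else:
        log.append("pass to default handling")


# Style 2: each control owns a named click handler, roughly the shape of
# what a Visual Basic developer wrote. Button is a hypothetical class.
class Button:
    def __init__(self, name):
        self.name = name
        self.on_click = None  # assigned by the developer

    def click(self):
        # The runtime, not the developer, routes the click to the handler.
        if self.on_click is not None:
            self.on_click()


log = []
wnd_proc("WM_LBUTTONDOWN", log)

greeting = []
ok_button = Button("OKButton")
ok_button.on_click = lambda: greeting.append("Hello from OKButton_Click")
ok_button.click()

print(log)
print(greeting)
```

In the first style the developer owns the routing of every message; in the second, the environment handles the routing and the developer writes only the code that runs when a specific control is clicked.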

“For years, you know, guys would come up to me and go: ‘you saved my career',” Cooper notes. To Geary, regularly encountering this sentiment from programmers “was one of the most gratifying things for all of us who worked on VB.”

Mainframe veterans weren't the only ones drawn to Visual Basic's accessibility. For many young people using computers for the first time, Visual Basic was the initial exposure to the power and joy of programming. “It gave me the start in understanding how functions work, how sub-procedures work, and how objects work. More importantly though, Visual Basic gave me the excitement and possibility that I could make this thing on my family's desk do pretty much whatever I wanted,” reminisced another Hacker News commenter.

Cornelius Willis and Jeff Beehler from Microsoft demo Visual Basic on a 1993 episode of “The Computer Chronicles” dedicated to visual programming environments.

Another reason for Visual Basic's success was the direct result of a design decision originally made by Cooper's team. Like Ruby, Visual Basic had a palette of controls that you could drag and drop onto a form. Each of these controls was implemented as a separate, dynamically loaded DLL module. Michael Geary initially helped the Microsoft team carry this feature over from Ruby to Visual Basic, but finishing it continually fell below the product's cut list. Scott Ferguson described the team's dogged efforts to keep the feature alive amidst the pressure to ship:

The feature's high cost derived mostly from the testing and documentation efforts required. There would also be an effort to recruit and coordinate with third parties to build custom controls ready to ship with the new VB; plus development resources to assist all these efforts. At one time marketing even suggested that if we did not ship by Christmas we had just as well not ship the product at all! Fortunately, [Bill Gates's] patient genius prevailed and he insisted that we increase the schedule and delay shipping to allow for Custom Controls. Once again, the idea that something was ‘mostly done' influenced the decision to get it done. And a whole new cottage industry was born as a side effect.

This interface for custom controls, known as VBX, grew into a booming third-party marketplace. Developers bought add-on VBX widgets from various companies to enhance their UIs—calendar date pickers, data grids, charts, barcode scanners, and more—without having to program the controls themselves.

Visual Basic's initial release in 1991 was followed by five major versions (not including an ill-fated version for DOS). By the time Visual Basic 6.0 was released in 1998, its dominance was absolute: two-thirds of all business application programming on Windows PCs was done in Visual Basic. At its peak, Visual Basic had nearly 3.5 million developers worldwide, more than ten times the number of C++ programmers.

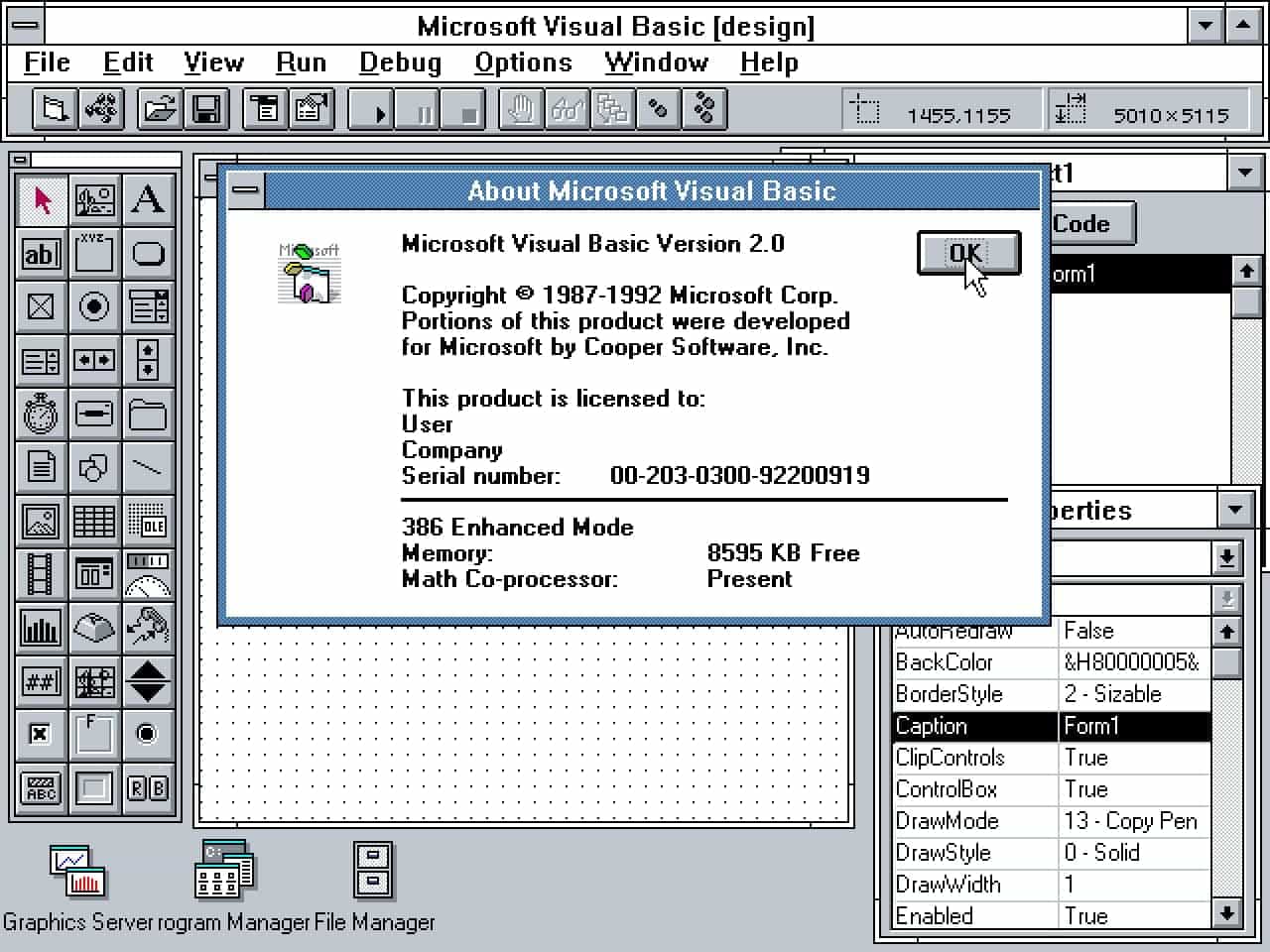

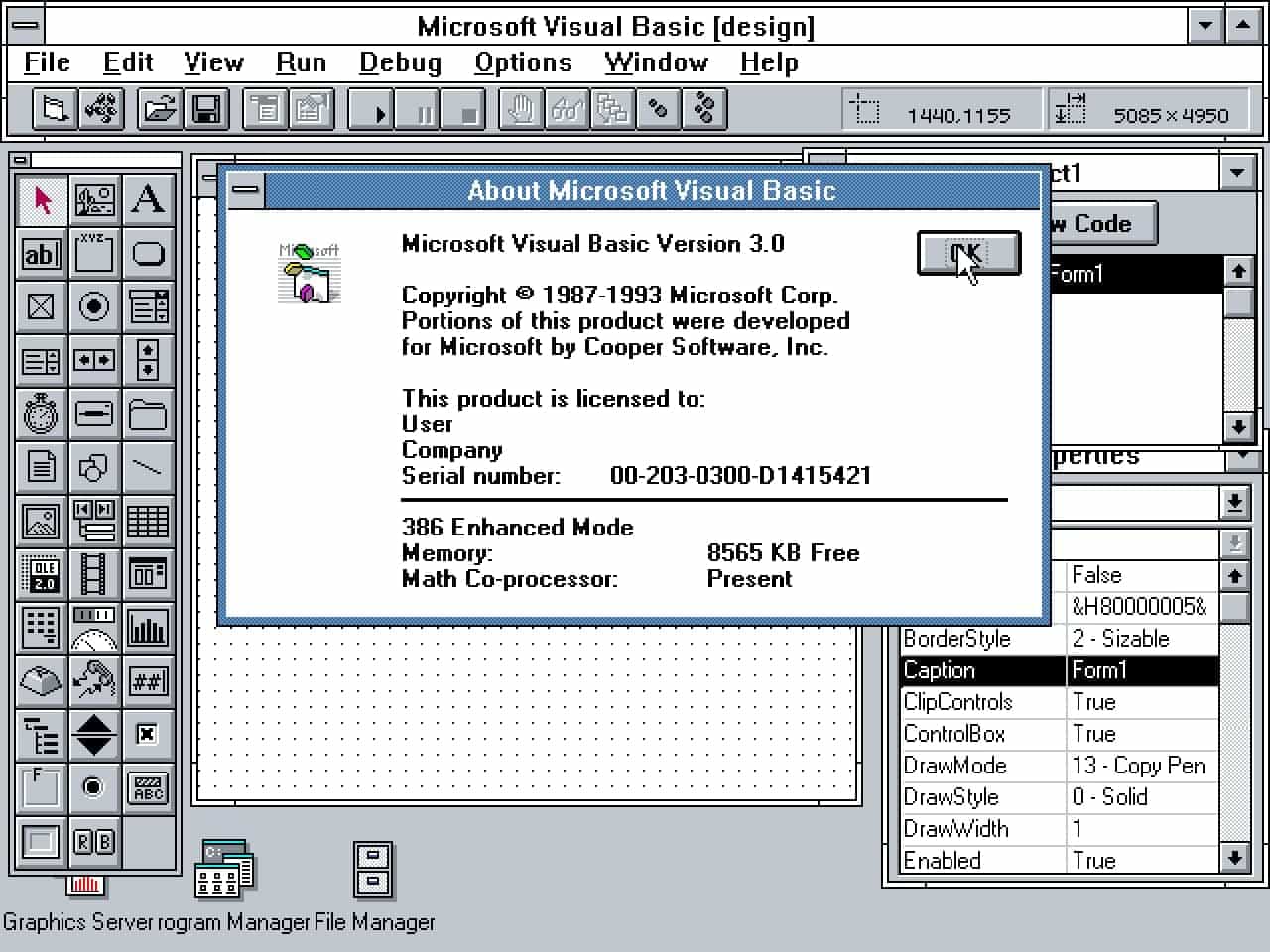

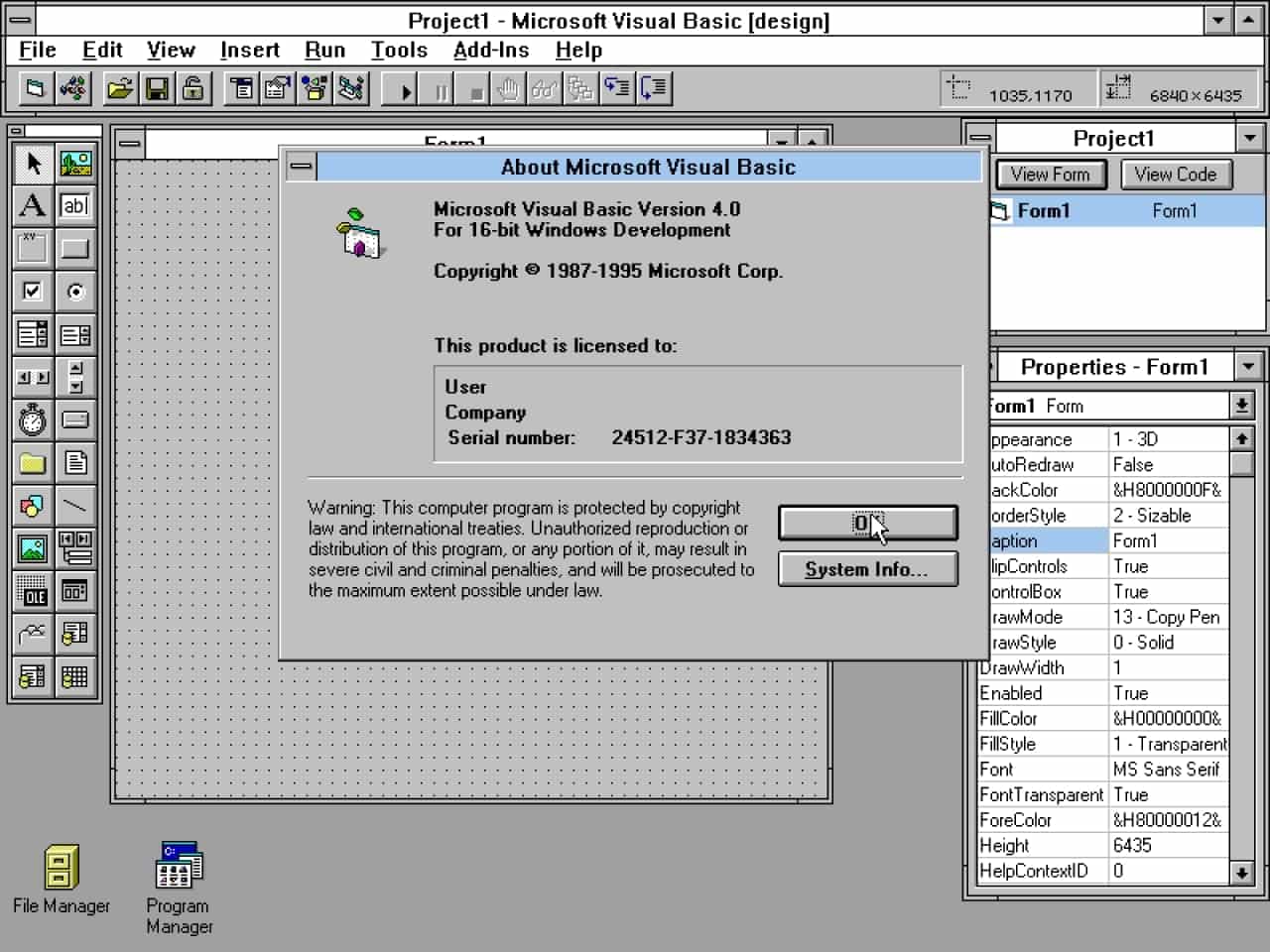

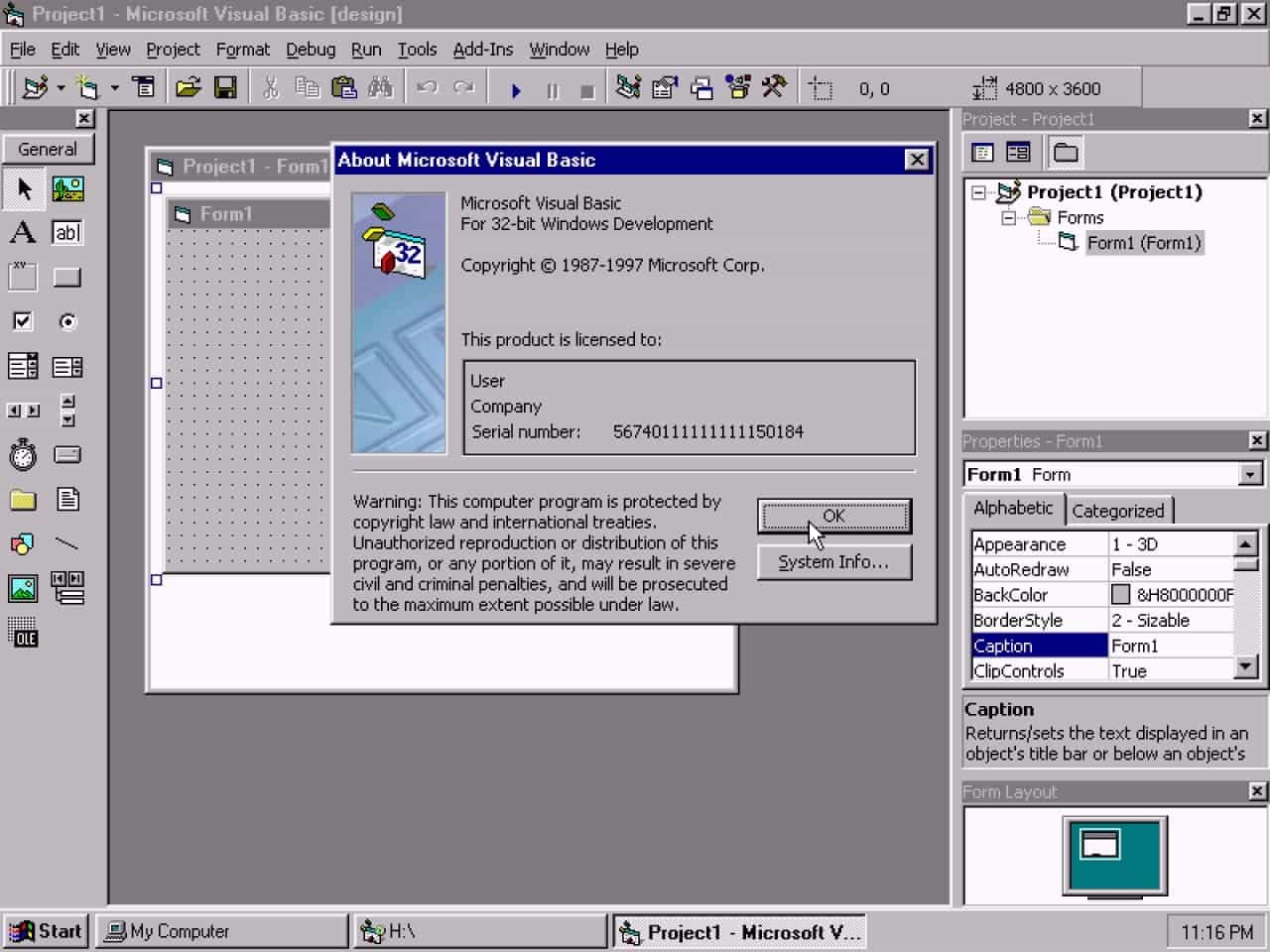

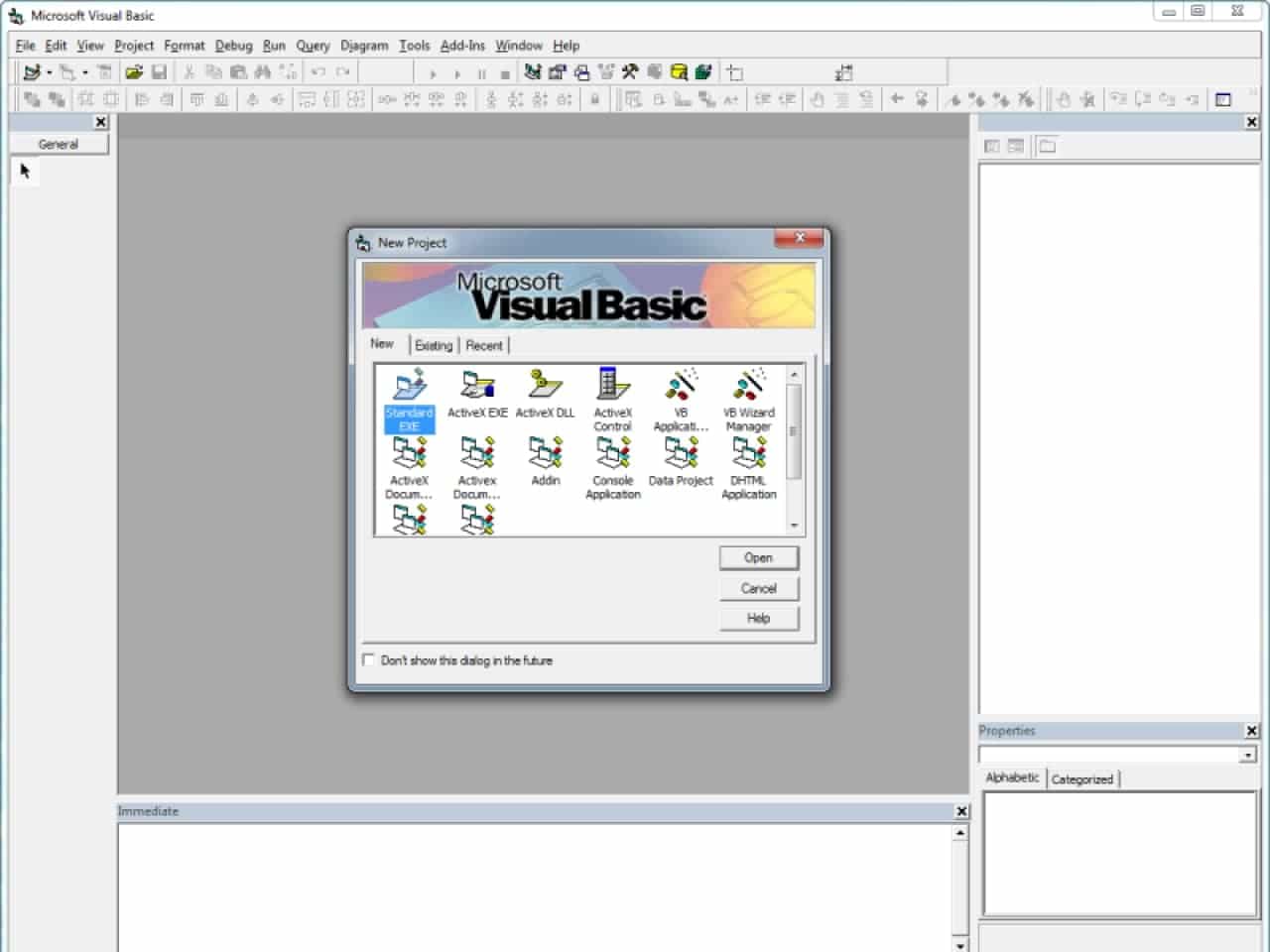

Visual Basic 1.0

Visual Basic 2.0

Visual Basic 3.0

Visual Basic 4.0

Visual Basic 5.0

Visual Basic 6.0

Cooper eventually came to appreciate Visual Basic's impact. “Had Ruby gone to the market as a shell construction set, it would have made millions of people happier, but then Visual Basic made hundreds of millions of people happier. I was not right, or rather, I was right enough, had a modicum of rightness. Same for Bill Gates, but the two of us together did something pretty right.”

Bill Gates presents Alan Cooper with the Windows Pioneer Award in recognition of his contributions to Microsoft Windows as “the father of Visual Basic.”

Microsoft's unforced error

Just when Visual Basic seemed unstoppable, there came a painful corporate decision that would lead to endless conjecture.

In the late 1990s, partially in response to the competitive threat of Sun's Java, Microsoft shifted its focus to a new development framework and common language runtime called .NET. Microsoft pushed hard for developers to adopt .NET, and Visual Basic was pulled into a ground-up rewrite to move it from a procedural language to an object-oriented one better suited to the new framework. The successor to Visual Basic 6.0, dubbed VB.NET and released in 2002, completely changed the ethos of the product, and turned out to be the death knell for the original idea of Visual Basic.

While Visual Basic was an easy on-ramp for developers with heavy abstractions, VB.NET was a more complex, full-featured programming language. It shared many complex concepts with Microsoft's new C# language, including threads, inheritance, and polymorphism. And like C#, it had a much steeper learning curve for developers to become proficient.

In a Microsoft blog post from 2012, .NET instructor David Platt recounts that Microsoft committed a classic mistake in product development—listening only to their most vocal customers:

Almost all Visual Basic 6 programmers were content with what Visual Basic 6 did. They were happy to be bus drivers: to leave the office at 5 p.m. (or 4:30 p.m. on a really nice day) instead of working until midnight; to play with their families on weekends instead of trudging back to the office. They didn't lament the lack of operator overloading or polymorphism in Visual Basic 6, so they didn't say much.

The voices that Microsoft heard, however, came from the 3 percent of Visual Basic 6 bus drivers who actively wished to become fighter pilots. These guys took the time to attend conferences, to post questions on CompuServe forums, to respond to articles. Not content to merely fantasize about shooting a Sidewinder missile up the tailpipe of the car that had just cut them off in traffic, they demanded that Microsoft install afterburners on their buses, along with missiles, countermeasures and a head-up display. And Microsoft did.

Worse, there was no reliable migration path for legacy apps from “classic” Visual Basic to VB.NET. While Microsoft released porting tools, they were unreliable at best, and users were faced with manual, time-consuming, error-prone rewrites. “[Microsoft] left everybody's VB6 code completely stranded with no path forward to making modern apps on the latest versions of Windows. A lot of times you couldn't even get your VB6 apps to install on the latest version of Windows,” recalls a Slashdot commenter.

Microsoft had broken the trust of its army of Visual Basic developers. Faced with the options of either starting over from scratch in VB.NET or moving to new web-native languages like JavaScript and PHP, most developers chose the latter—a brutal unforced error by Microsoft. (It's easy to forget the pole position that Microsoft had on the web in 2001: Internet Explorer had 96% market share, and Visual Basic apps could even be embedded into web pages via ActiveX controls.)

Evans Data estimated that from spring of 2006 to winter 2007, developer usage of the entire Visual Basic family dropped by 35%. By 2008, Microsoft officially sunsetted support for the VB6 IDE. It did, however, extend support of the VB6 runtime in Windows in effective perpetuity, a testament to the number of critical legacy business applications that its customers had built with Visual Basic.

A legacy of developer tooling

In 2006, an 18-year-old blogger and coder named Jaroslaw Rzeszótko emailed a series of unsolicited questions to a group of famous developers. Among his questions was a forward-looking one: “What will be the next big thing in programming?” Most of his audience rejected the basis of the question or demurred, but Linus Torvalds, creator of the Linux kernel, took the bait. He predicted the importance of incremental improvements in programming, specifically “tools to help make all the everyday drudgery easier.”

“For example, I personally believe that Visual Basic did more for programming than Object-Oriented Languages did,” Torvalds wrote, “yet people laugh at VB and say it's a bad language, and they've been talking about OO languages for decades. And no, Visual Basic wasn't a great language, but I think the easy DB interfaces in VB were fundamentally more important than object orientation is, for example.”

Torvalds, as it turns out, was right: it was primarily the tools, ecosystems, integrations, and frameworks that would define the near future, not the design of the languages themselves.

Coincidentally or not, the demise of Visual Basic lined up perfectly with the rise of the web as the dominant platform for business applications. If the microcomputer supercharged demand for business software, the Internet strapped it to a rocket and fired it into space. IDC predicts that between 2019 and 2023, over 500 million applications will be developed using cloud-native approaches, most targeted at industry-specific line-of-business use cases. That is as many apps in five years as were developed in the entire prior 40 years.

Satiating this global demand for software has not come with exponential growth in the number of developers. Hired reports that employment demand for developers doubled in 2021, but Evans Data projects that the total number of developers in the world will only grow by 20% from 2019 to 2024. In this gap, the single biggest lever (as Torvalds predicted) has been making developers more productive.

Twenty years ago, deploying an application on Windows meant walking around with 3.5” floppies or a CD-R and manually running an installer on each machine (a process repeated for every upgrade or bug fix). Deploying software to the web avoided the sneakernet, but required developers to buy expensive servers, physically rack them in a colocated data center, and manually fix or replace the hardware when it inevitably failed.

Jeff Bezos famously referred to tasks like these as “undifferentiated heavy lifting,” all of the prerequisite, unrelated slogs that stand between a programmer and realizing an idea in the world. Today, thanks to the continued development of abstraction layers like Amazon Web Services, a developer can instantaneously deploy an application to the edge right from the command line, never once reasoning about physical locations, hardware, operating systems, runtimes, or servers.

Still, the abstractions at the infrastructure layer have perhaps outpaced those on the client side: many of the innovations that Alan Cooper and Scott Ferguson's teams introduced 30 years ago are nowhere to be found in modern development. Where developers once needed to wrestle with an arcane Win32 API, they now have to figure out how to build custom Select components to work around browser limitations, or struggle to glue together disparate SaaS tools with poorly documented APIs. This, perhaps, fuels much of the nostalgic fondness for Visual Basic: that it delivered an ease and magic we have yet to rekindle.

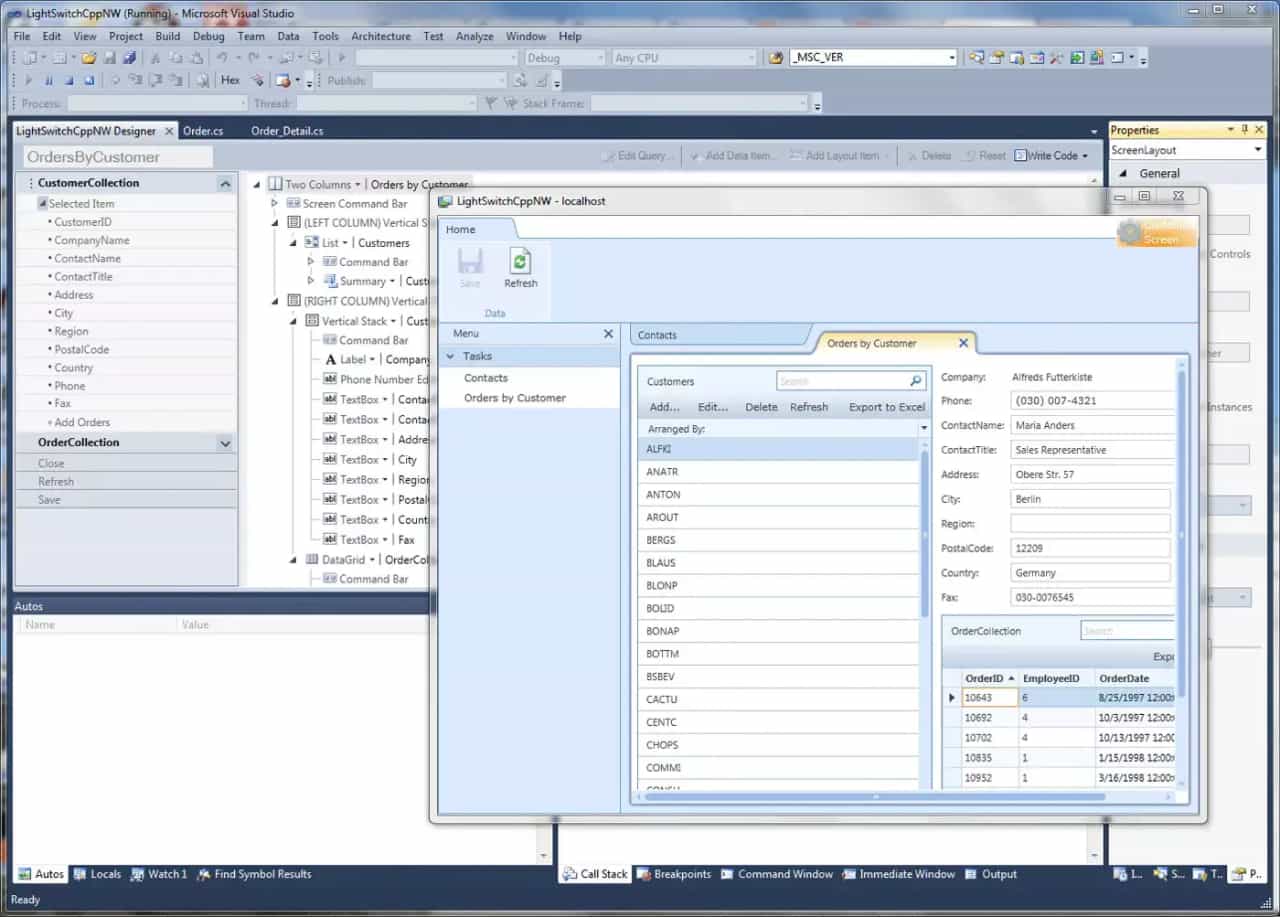

It hasn't been for want of trying. The ideas behind Visual Basic have been remarkably, stubbornly persistent. Whether built on spreadsheets, code, nodes, direct manipulation—or some combination thereof—novel authoring environments for programming continue to capture the imagination of developers. Even Microsoft itself has continued to chase the dream since sunsetting VB6, with efforts like LightSwitch, Expression Blend, Project Siena, and PowerApps.

Visual Studio LightSwitch

Visual Studio LightSwitch was one of Microsoft's many subsequent attempts to recapture the original magic—and market—of Visual Basic.

As for Alan Cooper, he considers himself lucky. Neither mathematically inclined nor particularly disposed to engineering, he happened to start his career during a time in software when what was needed was neither mathematics nor engineering, but carpentry. “I knew how to build stuff,” he recounts. And he created a piece of software that enabled other people to build stuff to solve their problems. Perhaps not perfectly, but pragmatically. The languages may change, the infrastructure may change, the runtimes may change—but that's an idea that will never go out of fashion.

From Visual Basic to HyperCard, at Retool we continually draw inspiration from the rich legacy of tools that have changed how we think about programming. We’re building a development environment that carries these ideas forward: combining the richness of visual direct manipulation with the ergonomics, expressiveness, and hackability of code.