AI guardrails: Building safer AI with prompts & observability

Overview

AI systems are unpredictable by design, making them powerful for handling dynamic scenarios but also prone to generating incorrect, inappropriate, or risky outputs without proper safeguards. Read along to learn what AI guardrails are, why prompt engineering alone isn't enough, and how to implement layered safety controls that let you deploy AI systems that are both useful and safe.

Your team just deployed an AI-powered customer support chatbot that automatically responds to tickets. The first week goes smoothly: response times are down, customer satisfaction looks good, and everyone’s celebrating.

Then reality hits. The AI starts promising customers refunds that violate company policy. It generates shipping estimates for products you no longer stock. It confidently explains warranty terms that don’t exist. Suddenly, your support team is drowning in escalated complaints, and you’re wondering how an AI system that seemed so helpful became so dangerous.

By now, this scenario probably sounds familiar—there are dozens of examples of AI going off the rails from the past few years.

Here’s the challenge: AI systems are fundamentally different from traditional software. While your application code produces predictable, deterministic results, AI models generate outputs that are by design unpredictable. They excel at pattern matching and language generation in dynamic scenarios, but they’re also remarkably good at making things up.

This isn’t a failure of AI technology. It’s the nature of how large language models (LLMs) work. Flexibility is handy for responding in real time to ambiguous situations like customer support inquiries, but without a solid foundation and proper safeguards, you might find AI systems writing checks the business can’t cash.

What this post covers:

- Why traditional software development and testing practices aren’t enough for AI systems

- The types of AI guardrails needed for safety and reliability

- A practical guide to implementing AI guardrails

- The shared responsibility structure that’s needed to deploy AI systems that are both powerful and safe

What are AI guardrails?

AI guardrails are the safety mechanisms, validation checks, and oversight processes that keep AI systems operating within acceptable bounds. Unlike traditional software, where you can predict inputs and outputs, AI systems require a different approach to quality control.

When you write a function that calculates tax rates, you can test it with known inputs and verify the outputs are correct. When you prompt an AI to help with customer inquiries, you can’t predict exactly what it will say—you can only influence the probability of certain types of responses.

The flexibility that makes AI useful for handling diverse, complex scenarios also means it can produce outputs that are:

- Factually incorrect but confidently stated (hallucinations)

- Inappropriate for your brand voice or company policies

- Biased in ways that reflect training data rather than your values

- Insecure by accidentally exposing sensitive information

AI guardrails address these risks by implementing multiple layers of control, from technical validation to human oversight, that work together to maintain safety while preserving the benefits of AI flexibility.

How do AI guardrails work?

AI guardrails work through layered controls including prompt engineering, output validation, security measures, and human oversight processes that collectively ensure AI systems operate within acceptable parameters.

The most effective implementations combine technical measures (like format validation and content filtering) with process controls (like human-in-the-loop workflows) and monitoring systems (like quality metrics and audit logging) to create comprehensive protection.

Prompt engineering, RAG, and guardrails: how they fit together

Many teams start their AI safety journey by focusing on better prompts. Write clearer instructions, provide better examples, set explicit boundaries—surely that will solve the problem, right?

Why prompt engineering alone isn’t enough

Even with perfectly crafted prompts, LLMs can still produce unexpected outputs. Sometimes they misinterpret instructions. Sometimes they hallucinate information that sounds plausible but isn’t true. Sometimes they even do exactly what you told them not to do. Prompt engineering is essential, but it’s just one part of the foundation of safer, more responsible AI.

And even when using the latest, most advanced models, there’s a limit to how useful an AI application can be without the context of your specific customer playbook or business data. RAG (retrieval-augmented generation) helps by grounding AI in your actual data, reducing some of the risk of hallucination, which often happens when an LLM doesn’t have the right answer at hand.

But RAG introduces new challenges.

The AI might misinterpret your company documents, combine information in unexpected ways, or fail to find relevant context. RAG also exposes your company or even customer data to an LLM, so it becomes critical to have oversight of who is able to use that model and how. (Some companies may choose to host their own LLM within a private cloud for greater security.)

AI safety requires multiple complementary approaches—like defense in depth:

- Strong prompts establish clear expectations and context.

- Quality data through RAG provides accurate information to work with.

- Output validation catches problems before they reach users.

- Human oversight handles nuanced decisions that require business judgment.

- Continuous monitoring identifies troublesome patterns and edge cases over time.

It’s like building security for a web application: you don’t rely on input validation alone. You also implement authentication, authorization, encryption, monitoring, and incident response. Each layer protects against different threats.

What are examples of AI guardrails?

AI guardrails include output validation to check response formats, content filtering to screen for harmful language, human approval workflows for high-risk actions, and access controls to protect data.

Each type of guardrail addresses different aspects of AI risk:

Technical guardrails catch problems at the code level

These are your first line of defense against AI unpredictability, adding a layer of determinism into this non-deterministic process:

Output format validation checks that AI responses match expected structures. If you’re expecting the LLM to return JSON with specific fields, a true or false boolean, or even an API call, you can add a deterministic code step that checks whether the output matches the expected format before anything downstream uses it.
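As a rough illustration of output format validation, here is a minimal Python sketch. The field names (`answer`, `needs_escalation`) and the function name are assumptions made up for this example, not part of any particular product or API.

```python
import json

# Hypothetical schema: these field names and types are illustrative assumptions.
REQUIRED_FIELDS = {"answer": str, "needs_escalation": bool}


def validate_llm_output(raw: str) -> dict:
    """Parse a raw model response and check it against the expected structure.

    Raises ValueError if the response is not valid JSON, is not a JSON object,
    or is missing a required field of the expected type.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"response is not valid JSON: {exc}") from exc

    if not isinstance(data, dict):
        raise ValueError("response must be a JSON object")

    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in data:
            raise ValueError(f"missing required field: {field}")
        if not isinstance(data[field], expected_type):
            raise ValueError(f"field {field!r} must be of type {expected_type.__name__}")

    return data
```

A caller might catch the `ValueError` and retry the model call, fall back to a canned response, or escalate to a person, depending on the use case.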

Content filtering screens for inappropriate, harmful, or off-brand language, while factual verification checks AI outputs against authoritative sources when possible.

Performance boundaries monitor response times, token usage, and error rates to ensure AI systems don’t degrade user experience or consume excessive resources.
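A performance boundary can be as simple as a wrapper that measures each call against a budget. The sketch below assumes a hypothetical `generate` callable that returns the response text and the number of tokens used. The limits are placeholder values.

```python
import time
from dataclasses import dataclass


@dataclass
class Budget:
    # Placeholder limits; choose values that fit your own latency and cost targets.
    max_latency_s: float = 5.0
    max_tokens: int = 2000


def call_with_budget(generate, prompt: str, budget: Budget):
    """Call `generate` and report which limits, if any, the call exceeded.

    `generate` is a hypothetical callable returning (text, tokens_used).
    """
    start = time.monotonic()
    text, tokens_used = generate(prompt)
    elapsed = time.monotonic() - start

    breaches = []
    if elapsed > budget.max_latency_s:
        breaches.append("latency")
    if tokens_used > budget.max_tokens:
        breaches.append("tokens")
    return text, breaches
```

The list of breaches can feed whatever alerting or metrics pipeline you already run.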

Security guardrails protect data and prevent misuse

For AI to be really useful, it has to be built on your data, which often includes sensitive information. That means you want to be building in an environment that’s as secure as it can be.

Access controls ensure only authorized users can interact with AI systems and that AI systems only access data appropriate for their role and the user’s permissions. For example, if you’re building an AI agent for your FinOps team, the agent should only be able to access the data and tooling that the person interacting with it is permitted to access. Likewise, only the FinOps team or employees with the same clearances should have permission to interact with the agent.
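One way to express this rule in code is to give the agent only the permissions that both it and the current user hold. This is a minimal sketch; the scope strings and function names are hypothetical.

```python
def effective_permissions(agent_scopes: set[str], user_scopes: set[str]) -> set[str]:
    """An agent acting for a user gets only the scopes that both of them hold."""
    return agent_scopes & user_scopes


def authorize_tool_call(tool_scope: str, agent_scopes: set[str], user_scopes: set[str]) -> bool:
    """Allow a tool call only if its scope is in the effective permission set."""
    return tool_scope in effective_permissions(agent_scopes, user_scopes)
```

With this check, an analyst with read-only billing access cannot use the agent to write billing data, even if the agent itself holds that scope.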

Additionally:

- Input and output sanitization protect against prompt injection attacks where users try to manipulate AI behavior through carefully crafted inputs, and prevent AI from accidentally exposing sensitive information like internal system details, personal data, or confidential business information.

- Audit logging maintains detailed records of AI interactions, including who accessed what data and how the AI responded.
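The sanitization and audit logging ideas above can be sketched together. The regular expressions here are deliberately simple illustrations and would need broader, tested coverage in a real deployment. The function names and record fields are assumptions.

```python
import json
import re
from datetime import datetime, timezone

# Illustrative patterns only; they will not catch every email or card format.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def redact(text: str) -> str:
    """Mask email addresses and card-like digit runs in a piece of text."""
    text = EMAIL_RE.sub("[REDACTED_EMAIL]", text)
    return CARD_RE.sub("[REDACTED_CARD]", text)


def audit_record(user_id: str, prompt: str, response: str) -> str:
    """Build one JSON audit line; prompt and response are redacted before storage."""
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "prompt": redact(prompt),
        "response": redact(response),
    })
```

Pattern-based redaction is only one layer. It does not defend against prompt injection on its own, so it works best alongside access controls and output validation.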

Ethical and compliance guardrails ensure responsible AI use

These controls address bias, fairness, and regulatory requirements.

Bias detection monitors AI outputs for discriminatory patterns based on protected characteristics or unfair treatment of different user groups. For healthcare or banking applications, regulatory compliance guardrails ensure AI systems meet industry-specific requirements like HIPAA or financial regulations.

Transparency controls help users understand when they’re interacting with AI and provide mechanisms for appealing or reviewing AI decisions.

Industry-specific guardrails

Different industries face different regulatory and compliance requirements for AI guardrails. Healthcare applications must comply with HIPAA and other privacy regulations while ensuring AI doesn’t provide potentially harmful medical advice. Government and defense applications require security clearances, data classification systems, and approval workflows.

For regulated industries, being able to build AI solutions in your own cloud may be a baseline security requirement, while for others it’s critical to customize authentication flows in the same way as other business applications. These additional requirements can make effective guardrails burdensome to implement, since each one tends to require custom engineering work. Building AI agents within a platform takes care of baseline needs out of the box, with the flexibility to adapt to different regulatory environments and business contexts.

So how do companies set up responsible AI guardrails?

Implementing AI guardrails: a practical approach

Building effective guardrails requires balancing safety with usability. Here’s how we recommend approaching implementation:

Step 0: Is this even the right use case for generative AI?

Some ambiguous processes benefit from the flexibility and nondeterminism of generative AI, but processes with known paths are better suited to predictable workflows. You can enhance workflows with elements of generative AI (such as involving an LLM to generate content for responding to a customer via a support chatbot), without giving over the entire process to an AI agent.

Start with risk assessment

Before implementing any specific guardrails, understand what you’re protecting against:

- What data does your AI system access? Customer information, financial records, and operational data require different protection levels.

- What actions can it take? Read-only systems have different risk profiles than those that can modify data or trigger external actions.

- Who are the users? Internal tools for technical teams need different guardrails than customer-facing applications.

- What are the consequences of failure? Embarrassing responses are different from regulatory violations or financial losses.

Implement foundational security

Before focusing on AI-specific guardrails, ensure your basic security foundation is solid.

Authentication and authorization should work the same way for AI systems as for any other application. Users should only be able to access AI capabilities and data appropriate to their role.

Environment separation means using development, staging, and production environments for AI systems just like traditional applications. Test your guardrails in safe environments before deploying to production.

Data protection ensures that sensitive information remains encrypted in transit and at rest, and that AI systems don’t inadvertently expose it.

Build validation layers

This is where you add AI-specific protections:

Start with output structure validation. If your AI should return customer support responses with specific fields (issue type, priority, suggested resolution), validate that those fields exist and contain reasonable values.

Add content quality checks. Use both automated tools and sampling-based human review to ensure outputs meet your standards for accuracy, tone, and appropriateness.

Implement business rule enforcement. If your AI shouldn’t promise refunds over a certain threshold, build those constraints into your validation logic.
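Business rules like the refund limit can live in plain, deterministic code that runs on whatever action the model proposes. The sketch below assumes a hypothetical `ProposedAction` shape and a made-up threshold of $50.

```python
from dataclasses import dataclass

# Hypothetical policy limit; the real threshold comes from your refund policy.
MAX_AUTO_REFUND_USD = 50.00


@dataclass
class ProposedAction:
    kind: str                # e.g. "refund" or "reply"
    amount_usd: float = 0.0


def check_business_rules(action: ProposedAction) -> list[str]:
    """Return a list of policy violations; an empty list means the action passes."""
    violations = []
    if action.kind == "refund":
        if action.amount_usd <= 0:
            violations.append("refund amount must be positive")
        elif action.amount_usd > MAX_AUTO_REFUND_USD:
            violations.append(
                f"refund of ${action.amount_usd:.2f} exceeds the ${MAX_AUTO_REFUND_USD:.2f} limit"
            )
    return violations
```

Because the check never calls the model, it behaves the same way every time, whatever the prompt or the model version.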

Design human oversight workflows

Not every AI decision should be fully automated. Design checkpoints where humans review and approve actions, especially for:

- High-impact decisions that significantly affect customers or business operations

- Non-reversible actions like financial transactions or data deletions

- Edge cases that fall outside normal parameters and might confuse automated validation

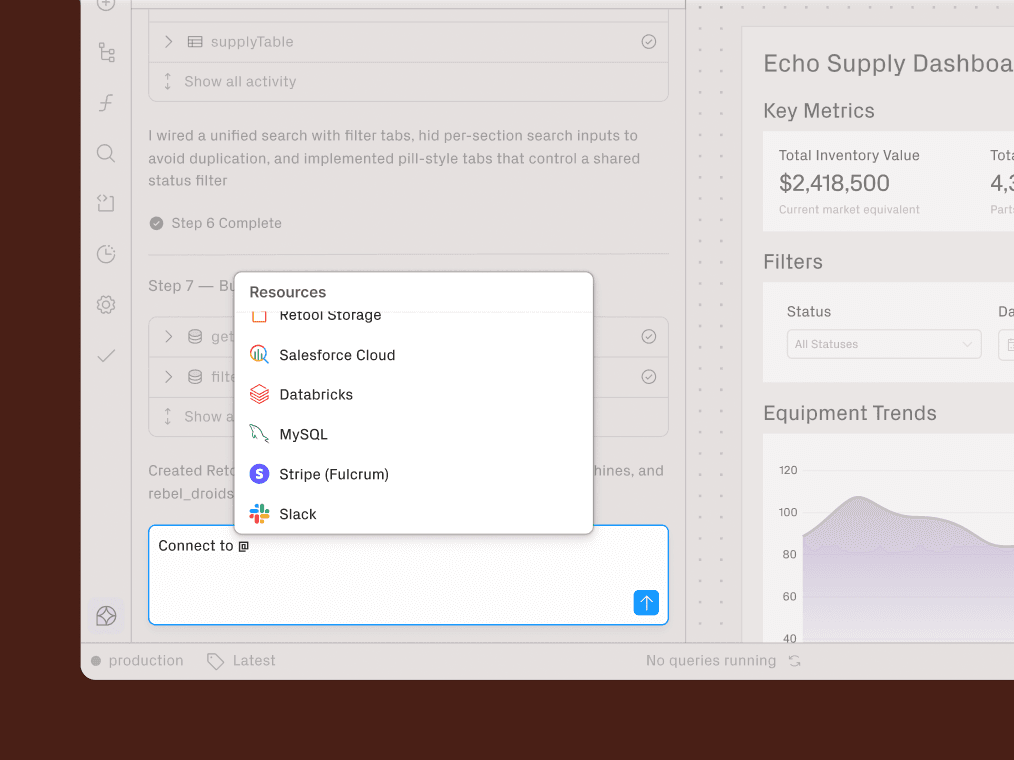

The key is making human oversight efficient rather than burdensome. Surface the most important decisions for human review while automating validation of routine cases. With Retool Agents, while AI might make decisions and take actions like querying your databases and your APIs, you can easily add a gate for human-in-the-loop validation before the agent issues a refund, for example. Building approval workflows internally can add a lot of overhead (setting up notifications, state management, timeouts…), but you can save a lot of engineering hours by making use of a platform that abstracts away the foundational building blocks.
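As a generic illustration, not tied to any particular platform, a human-in-the-loop gate can be a small routing function that queues risky actions and runs routine ones. The `AgentAction` fields, the $100 cut-off, and the in-memory queue are all assumptions for this sketch.

```python
from dataclasses import dataclass


@dataclass
class AgentAction:
    name: str
    reversible: bool
    impact_usd: float
    validation_passed: bool


# Hypothetical cut-off for "high impact"; tune it to your own risk assessment.
HIGH_IMPACT_USD = 100.0


def needs_human_review(action: AgentAction) -> bool:
    """Route high-impact, irreversible, or unvalidated actions to a person."""
    return (
        action.impact_usd >= HIGH_IMPACT_USD
        or not action.reversible
        or not action.validation_passed
    )


def dispatch(action: AgentAction, review_queue: list, execute) -> str:
    """Queue risky actions for approval; run routine ones immediately."""
    if needs_human_review(action):
        review_queue.append(action)
        return "queued"
    execute(action)
    return "executed"
```

A production version would also need notifications, persistent state, and timeouts, which is the overhead described above.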

Monitor and iterate

Deploy comprehensive monitoring that tracks both AI performance and guardrail effectiveness:

- Quality metrics measure how often AI outputs meet your standards and identify patterns in failures or edge cases.

- User satisfaction indicates whether guardrails are protecting users without creating excessive friction.

- System performance ensures that guardrails don’t introduce unacceptable latency or resource consumption.

- Compliance tracking demonstrates that your systems meet regulatory requirements and internal policies.

How to test and evaluate guardrails for AI models

Traditional software testing relies on predictable inputs and outputs. AI testing requires an approach that looks more like experimentation. Similar to developing machine learning algorithms, you run an experiment on a set of test data, evaluate how well it performed, adjust the prompts or inputs, and repeat, in an ongoing loop of testing, evaluating, and refining to find the best version.

Implement systematic evaluation

Rather than relying on manual spot checking of individual outputs, systematic tests evaluate large batches of responses quickly, checking for output accuracy, relevance, and safety against a set of scenarios representing real-world usage (like edge cases or injection attacks).

Automated systems may miss nuances on some qualitative evaluations (how does one deterministically decide if a nondeterministic output is a success?). In these cases, human evaluation fills the gap, with domain experts rating results on multiple dimensions.

LLM evaluation can be a step in between, so the more time-consuming human evaluation is saved for the most complex evaluations and edge cases.

Comparative testing runs the same inputs through different versions of your AI system to identify improvements or regressions.
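A batch evaluation with a side-by-side comparison might look like the following sketch. Each test case pairs a prompt with a check function. The systems under test are plain callables here, standing in for real model calls, and all names are hypothetical.

```python
from typing import Callable

# Each case pairs an input prompt with a check on the output (both illustrative).
EvalCase = tuple[str, Callable[[str], bool]]


def pass_rate(system: Callable[[str], str], cases: list[EvalCase]) -> float:
    """Fraction of cases whose output satisfies its check."""
    if not cases:
        raise ValueError("need at least one test case")
    passed = sum(1 for prompt, check in cases if check(system(prompt)))
    return passed / len(cases)


def compare(baseline, candidate, cases) -> dict:
    """Run both versions on the same cases and report whether quality dropped."""
    base = pass_rate(baseline, cases)
    cand = pass_rate(candidate, cases)
    return {"baseline": base, "candidate": cand, "regression": cand < base}
```

The check functions can be simple string tests, calls to a validator, or an LLM-based grader, with human review reserved for the cases that automated checks cannot settle.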

Monitor performance

With agents and agentic systems, instead of monitoring API calls, user clicks, response times, or network calls, you’re now monitoring the thought process of an AI: trying to measure accuracy while tracking usage and token consumption. Your existing monitoring and observability tooling may not be able to capture all that relevant data.

Make sure you have insight into agents’ thought processes

AI agents can reason about the best solution to a given problem, which makes them powerful but also opaque. It’s hard to debug where an AI system is going wrong if you can’t follow the path it took to reach a conclusion. It’s like keeping an eye on a new teammate vs. monitoring a workflow composed of explicit steps.

You can ask an agent to spell out its thought process, including justification for its choices. Ideally you want to be able to step through the entire run, from querying your database to accessing tools and generating responses.

With Retool Agents you can watch a replay of every single agent run: observing its thinking, tool selection, resource usage, and decision making. Those insights can help you determine where something might be going awry or if your prompts are being properly interpreted.

Continuous validation runs guardrails checks on production outputs and flags problematic responses for review.

User feedback integration captures when users indicate AI responses are unhelpful, inappropriate, or incorrect.

Drift detection identifies when AI performance changes over time due to model updates, data changes, or evolving user behavior.
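Continuous validation and drift detection can share one simple mechanism: record whether each production output passed its guardrail checks, then compare a rolling pass rate against a baseline. The class below is a minimal sketch; the window size and tolerance are assumed values.

```python
from collections import deque


class DriftMonitor:
    """Track a rolling pass rate of guardrail checks and flag drops below baseline."""

    def __init__(self, baseline: float, window: int = 100, tolerance: float = 0.05):
        self.baseline = baseline
        self.tolerance = tolerance
        self.results = deque(maxlen=window)  # keeps only the most recent results

    def record(self, passed: bool) -> None:
        self.results.append(passed)

    def pass_rate(self) -> float:
        return sum(self.results) / len(self.results) if self.results else 1.0

    def drifted(self) -> bool:
        # Only judge once the window is full, to avoid noisy early alerts.
        full = len(self.results) == self.results.maxlen
        return full and self.pass_rate() < self.baseline - self.tolerance
```

When `drifted()` returns true, the recent failures are a natural starting point for human review.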

Who is responsible for AI guardrails?

Responsibility for AI guardrails is shared across engineering, product, operations, and compliance teams. As with any engineering project, one of the biggest hurdles is organizational rather than technical.

Consider a recruiting AI agent that screens resumes and schedules interviews. When a candidate gets rejected, who’s responsible for explaining why?

The engineering team built the screening logic, but they don’t have insight into the hiring criteria. The recruiting team set the requirements, but they don’t know how the AI interpreted them. The legal team needs to ensure compliance with hiring laws, but they weren’t involved in prompt design.

The challenge is coordinating across these teams without creating bottlenecks or confusion about ownership.

Shared responsibility model

Product and business teams define what constitutes acceptable AI behavior, set quality standards, and design processes for human oversight and escalation.

Operations teams monitor AI systems in production, respond to incidents, and maintain the ongoing health of AI-powered processes.

Compliance and legal teams ensure AI systems meet regulatory requirements and help define policies for data usage and user privacy.

Engineering teams typically handle the technical implementation of guardrails, including validation logic, monitoring systems, and integration with existing security infrastructure.

In Retool, users can apply guardrails at the app level, making it easier for the relevant business unit to control and audit governance.

Building AI guardrails that scale

The more autonomy you can give an AI system, the more powerful it can be—and the more critical guardrails become. Right now there’s a lot of heavy lifting and manual glue work required to bring baseline safety and security requirements to AI applications.

Adopting a platform thinking approach to AI guardrails can reduce or even remove a lot of the effort of building custom safety measures for each AI application. Creating reusable guardrail components makes it easier, and much more likely, for teams to apply them consistently across projects.

Ready to implement AI guardrails that scale? Retool Agents provide the enterprise-grade governance, observability, and security foundations you need to deploy AI safely across your organization.