The current crop of LLMs (large language models) is perhaps the greatest set of generalists ever to live digitally. Trained on billions of words from across the web, they can field questions about llamas or pajamas or even llamas in pajamas, and they seem to know it all.

But great generalists are not always great specialists. Anyone who’s used an LLM like ChatGPT, Claude, or Gemini for specific asks will know that even very robust models don’t always have full context on a topic, and that they’re only trained on data available up to a certain date. They can miss the nuance, detail, and up-to-date information that would make their responses more valuable.

This is where retrieval-augmented generation, or RAG, comes in. RAG is a technique used alongside LLMs to enhance their responses with specific, current, and relevant information. By allowing you to add domain-specific information and data from your company or field to your AI, RAG can be a huge advantage when you’re building AI applications for your business.

RAG allows you to add more detail and knowledge to any LLM answer by giving the model access to data it otherwise wouldn’t have.

Let’s take Retool as an example. Say we wanted to build an AI assistant that helped users understand Retool AI better. If we just used GPT-4 or a Claude model, that might work. But those models were trained when Retool AI was nascent, so they might not have the latest information.

It would be better if we could make sure the model was using the Retool AI documentation to answer queries, combining the natural language interface of AI with the domain-specific knowledge of the docs.

We can do that with RAG.

So, how do you add this information to an AI model?

Well, the neat thing about RAG is that you don’t. Unlike fine-tuning, where you adjust and update the model’s parameters to adapt it to a specific task or domain, with RAG, you simply provide the model with access to a separate knowledge store, allowing it to retrieve and incorporate the most relevant information on the fly.

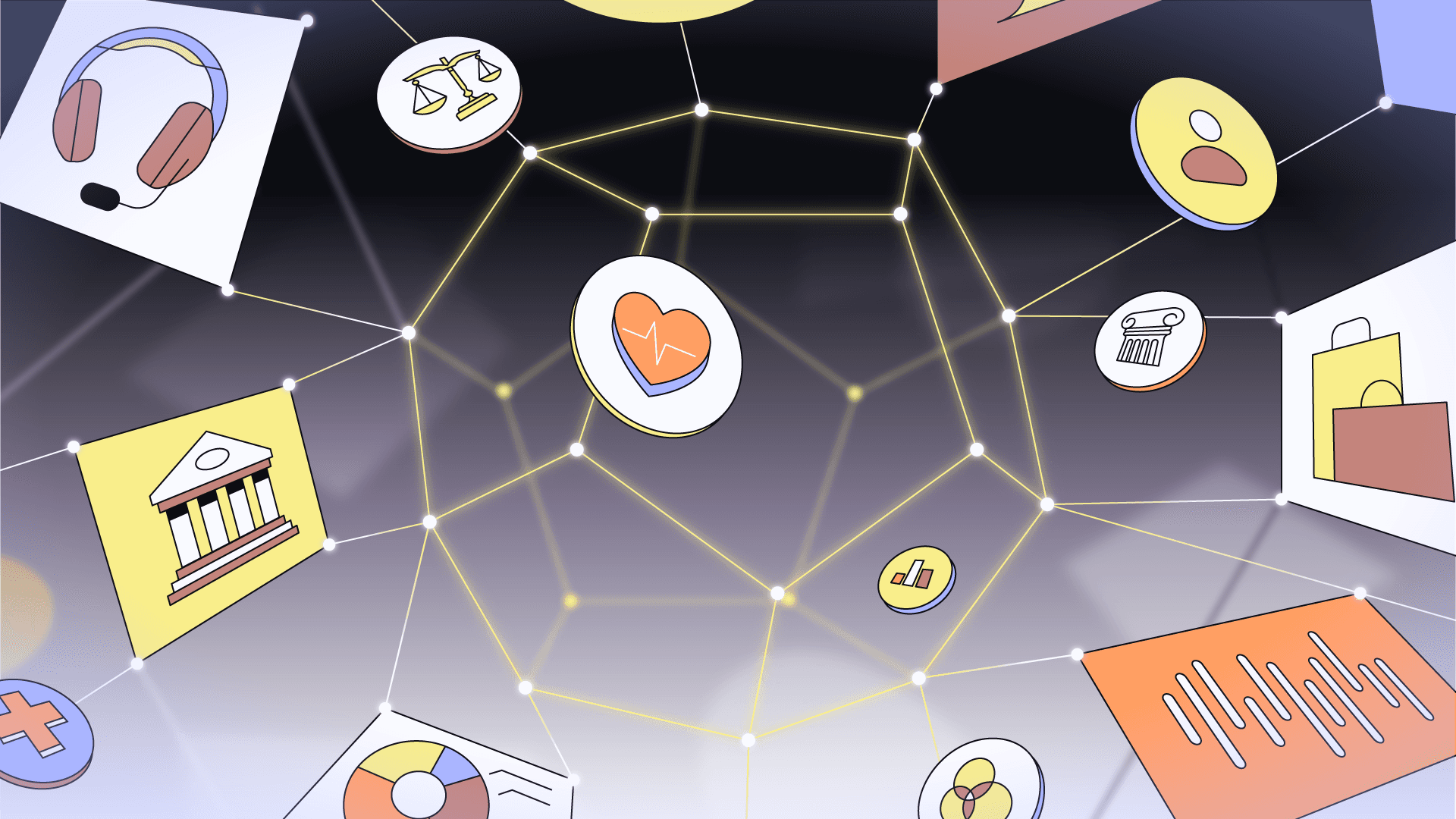

We can break RAG down into three components:

- Encoding

- Retrieval

- Generation

The first step is encoding. We need to take our “knowledge” and encode it in a way that AI models understand, which means translating the text into vectors. Vectors are numerical representations that capture the meaning and context of words or documents as points in a high-dimensional space.

Many AI systems provide helpers to do this. For instance, you can use the OpenAI Embeddings API to turn any text into a vector that can be used in the retrieval process. Here is part of the vector for the previous paragraph:

```
[-0.0068292976, 0.0373624, -0.015441199, -0.015633913, 0.04938293, -0.039434075, 0.0037699651, -0.0060042418, -0.028762545, 0.04025311, 0.0053146873, -0.0032580688, -0.019271387, -0.06441461, 0.026160909, -0.029003438, -0.0041282927, -0.0032159127, 0.027076298, 0.002509797, 0.014622165, -0.0049142037, 0.027871244, 0.012020527, 0.03143645, -0.0061186654, 0.0037157643, 0.024631241, 0.045070957, -0.00037432413, ...]
```
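To make “text in, vector out” concrete, here is a minimal Python sketch. It is a toy stand-in, not how the OpenAI Embeddings API or any real embedding model works: the hypothetical `embed` function hashes each word into one of a fixed number of buckets (the `dim=256` default is our own choice), so it only records which words appear, not what they mean. A real pipeline would replace it with a call to an embedding model and keep the rest of the flow the same.

```python
import math
import re
import zlib


def embed(text: str, dim: int = 256) -> list[float]:
    """Toy stand-in for an embedding model (hashed bag-of-words).

    Each lowercase word is hashed into one of `dim` buckets; the bucket
    counts form the vector, which is then scaled to unit length.
    This captures word overlap only, not meaning.
    """
    if dim <= 0:
        raise ValueError("dim must be positive")
    vec = [0.0] * dim
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        # zlib.crc32 is stable across runs, unlike Python's salted hash()
        vec[zlib.crc32(word.encode("utf-8")) % dim] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


if __name__ == "__main__":
    v = embed("Vectors are mathematical representations of text.")
    print(len(v))                         # 256
    print([round(x, 3) for x in v if x])  # the few non-zero components
```

Like a real embedding, the output is a fixed-length list of floats regardless of how long the input is; unlike a real embedding, two sentences with the same meaning but different words would get unrelated vectors.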

When converting an entire document or set of documents (like technical documentation) into vectors, we don’t do it all in one go. Instead, we preprocess the text first. The most important part of this is chunking: splitting the text into smaller, more manageable passages, such as a few paragraphs or a few hundred tokens each. Embedding models accept only a limited amount of input, and smaller chunks let retrieval return just the relevant passage rather than a whole document. Each chunk is then tokenized (broken into words or subwords, depending on the model) and turned into a vector.
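Here is a minimal sketch of the chunking step, assuming whitespace-separated words as a rough proxy for tokens. The `chunk_text` name and the 200-word chunks with a 40-word overlap are illustrative choices, not recommendations; production pipelines usually count tokens with the embedding model’s own tokenizer (for OpenAI models, the `tiktoken` library) and tune sizes for their content.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into overlapping chunks of roughly `chunk_size` words.

    Words (split on whitespace) approximate tokens here; consecutive chunks
    share `overlap` words so ideas near a boundary are less likely to be cut.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be at least 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks: list[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


if __name__ == "__main__":
    doc = " ".join(f"word{i}" for i in range(500))
    chunks = chunk_text(doc, chunk_size=200, overlap=40)
    print(len(chunks))  # 3 chunks: words 0-199, 160-359, 320-499
```

The overlap repeats a little text across boundaries, so an idea that straddles two chunks is less likely to be split in half in both of them.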

We then store our vectors in a vector database. Vector databases are specialized storage systems designed to efficiently store, manage, and search through large collections of high-dimensional vectors. This search is critical in the next phase of RAG.

OK, we’ve tokenized and vectorized our documents. How will we use that information when a user asks a question?

That is the R of RAG: Retrieval. When a user writes a question, such as “How does Retool Vectors work?”, the first step is to create a vector for that query, using the same embedding model that encoded the documents. The premise here, which also underlies how LLMs represent language, is that similar textual concepts have similar high-dimensional vectors, so a question and the passages that answer it should end up close together.
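“Similar” needs a measure. A common choice is cosine similarity, which compares the direction of two vectors and ranges from 1 (same direction) to $-1$ (opposite); dot product and Euclidean distance are also used, depending on the embedding model and vector database. For a query vector $\mathbf{q}$ and a document vector $\mathbf{d}$:

$$
\cos(\mathbf{q}, \mathbf{d}) = \frac{\mathbf{q} \cdot \mathbf{d}}{\lVert \mathbf{q} \rVert \, \lVert \mathbf{d} \rVert}
$$

As a worked example with made-up 2-D vectors $\mathbf{q} = (1, 0)$, $\mathbf{d}_1 = (0.8, 0.6)$, and $\mathbf{d}_2 = (0, 1)$ (all of length 1):

$$
\cos(\mathbf{q}, \mathbf{d}_1) = \frac{1 \cdot 0.8 + 0 \cdot 0.6}{1 \cdot 1} = 0.8,
\qquad
\cos(\mathbf{q}, \mathbf{d}_2) = \frac{1 \cdot 0 + 0 \cdot 1}{1 \cdot 1} = 0
$$

so $\mathbf{d}_1$ is the better match. Real embeddings have hundreds or thousands of dimensions, but the arithmetic is the same.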

In RAG, the vector database takes the query vector and uses similarity search to quickly find the stored vectors closest to it, and thus the most relevant pieces of information. It then returns the text behind those vectors, which most likely contains the information needed to answer the query (in this case, probably the documentation on Retool Vectors).
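The store-and-search loop can be sketched in a few lines of Python. `InMemoryVectorStore` is a hypothetical, brute-force class of our own (not Retool’s or any vector database’s API): it normalizes each vector so a dot product equals cosine similarity, scores every stored vector against the query, and returns the top `k`. Real vector databases typically use approximate nearest-neighbor indexes (such as HNSW) to avoid scanning every vector, trading a little accuracy for speed.

```python
import heapq
import math


def _unit(vec: list[float]) -> list[float]:
    """Scale a vector to length 1 so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("zero vectors have no direction to compare")
    return [x / norm for x in vec]


class InMemoryVectorStore:
    """Hypothetical brute-force vector store: exact cosine search over every entry."""

    def __init__(self) -> None:
        self._texts: list[str] = []
        self._vectors: list[list[float]] = []

    def add(self, text: str, vector: list[float]) -> None:
        """Store a chunk of text alongside its (normalized) embedding."""
        if self._vectors and len(vector) != len(self._vectors[0]):
            raise ValueError("all vectors must share one dimension")
        self._texts.append(text)
        self._vectors.append(_unit(vector))

    def search(self, query: list[float], k: int = 3) -> list[tuple[float, str]]:
        """Return up to k (score, text) pairs, most similar first."""
        if self._vectors and len(query) != len(self._vectors[0]):
            raise ValueError("query dimension does not match stored vectors")
        q = _unit(query)
        scored = (
            (sum(a * b for a, b in zip(q, v)), text)
            for text, v in zip(self._texts, self._vectors)
        )
        return heapq.nlargest(k, scored, key=lambda pair: pair[0])


if __name__ == "__main__":
    store = InMemoryVectorStore()
    # Toy 2-D vectors stand in for real embeddings.
    store.add("Retool Vectors overview", [0.8, 0.6])
    store.add("Llamas in pajamas", [0.0, 1.0])
    store.add("Unrelated note", [-1.0, 0.0])
    for score, text in store.search([1.0, 0.0], k=2):
        print(f"{score:.2f}  {text}")
```

Running it ranks the overview chunk first with a score of 0.80, since its toy vector points closest to the query’s; the text returned for the top matches is what gets passed on to the generation step.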

Now that we’ve retrieved the relevant text, it’s time to augment our generation.

This is the easy part. What happens next is effectively a play on prompt engineering. Prompt engineering is what we all do when using AI-powered chatbots and conversational interfaces—we word our prompt to get the best answer. We want to give the AI as much context as possible so it gives a relevant response.

RAG takes that a step further: it passes the text retrieved from the vector database to the LLM along with the user’s original query. This allows the LLM to draw on the most relevant information to generate the best answer.
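The augmentation step is mostly string assembly. Below is a minimal sketch; the `build_prompt` name, the instruction wording, and the character budget are all assumptions, and the right template depends on your model and use case. The returned string is what would be sent to the LLM in place of the bare question.

```python
def build_prompt(question: str, passages: list[str], max_chars: int = 4000) -> str:
    """Assemble an augmented prompt: retrieved passages first, then the question."""
    context_parts: list[str] = []
    used = 0
    for i, passage in enumerate(passages, start=1):
        block = f"[{i}] {passage.strip()}"
        if used + len(block) > max_chars:
            break  # crude budget so the prompt fits the model's context window
        context_parts.append(block)
        used += len(block)
    context = "\n\n".join(context_parts) if context_parts else "(no relevant context found)"
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


if __name__ == "__main__":
    print(build_prompt(
        "How does Retool Vectors work?",
        ["Hypothetical passage retrieved from the docs about Retool Vectors."],
    ))
```

Numbering the passages makes it easy to ask the model to cite which one it used, and telling it to admit when the context lacks an answer is one common way to discourage it from filling gaps with guesses.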

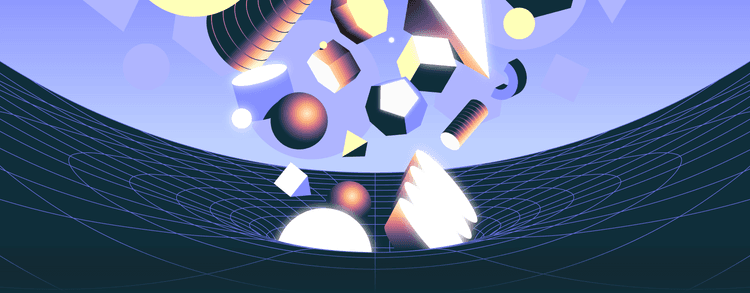

Here’s a diagram of the whole process:

- The white arrows indicate the encoding process, in which documents are converted into vectors, which are then stored in a vector database.

- The yellow arrows represent the retrieval process, in which a query is also translated into a vector, a similarity search is performed to find the most relevant information, and the query and this added context are sent to the LLM.

- The blue arrow indicates the generation phase, during which the LLM generates an up-to-date, domain-specific response with this extra context.

That is how you make your AI responses far more relevant for your users without fine-tuning your model.

When is RAG going to shine?

The most obvious case is domain-specific applications. If you’re building an AI application for a specific domain, RAG can help ensure that your AI provides accurate, up-to-date, and relevant information. For example:

- Healthcare: A drug interaction checker that leverages RAG to retrieve information from a comprehensive database of medications and their potential interactions. By incorporating this knowledge into its responses, the AI can help prevent adverse drug events and improve patient safety.

- Finance: A fraud detection system that leverages RAG to retrieve information from a database of known fraud patterns and suspicious transactions. By incorporating this knowledge into its analysis, the AI can more effectively identify and flag potential fraudulent activities.

- Legal: A legal research assistant that uses RAG to access a vast repository of case law, statutes, and legal commentary. This allows the AI to provide more relevant and authoritative answers to legal questions, citing specific cases and laws to support its responses.

Conversational AI for product users is also an excellent fit for RAG, as in the Retool AI example above:

- Technical documentation: A programming assistant that uses RAG to access a company’s technical documentation, code snippets, and API references. This allows the AI to provide developers with more accurate and context-specific guidance, helping them troubleshoot issues and implement features more efficiently.

- Customer support: A returns and refunds assistant that leverages RAG to retrieve information from a company’s return policy, shipping records, and customer purchase history. By incorporating this knowledge into its responses, the AI can more accurately guide customers through the returns process and help resolve any issues.

- Product recommendations: A product recommendation engine that uses RAG to access a company's product catalog, customer reviews, and sales data. This allows the AI to provide more relevant and personalized product suggestions based on the user's preferences, past purchases, and current needs.

On the other hand, there are some cases where RAG may not be the best fit. Because RAG involves an additional retrieval step, it may introduce some latency compared to purely generative models, making it less “real-time.” If your application requires near-instant responses, you may need to carefully balance the benefits of RAG with the potential impact on response times.

If you’re integrating AI within your organization, retrieval-augmented generation can be an excellent opportunity to build AI apps that truly meet users’ needs. However, using the RAG technique requires a specific set of skills and domain knowledge that engineering teams might not readily possess. Retool lowers the barrier to entry and enables teams to build RAG-powered applications on a single platform with a friendly user interface.

With Retool Vectors, teams can upload unstructured text and let Retool handle the tokenization, vectorization, and vector storage. When combined with Retool AI, vectors can be queried by AI models from providers like OpenAI, Azure, Anthropic, Amazon, or Cohere to give context to otherwise generic LLMs.

Using Retool’s UI building blocks, you can then integrate AI into whatever use case you have, building entire applications with RAG built in. This lets users create solutions tailored to their unique needs while maximizing the ROI on out-of-the-box LLMs.

Want to try building your own AI application with RAG? Sign up for Retool and get started today.
