
People have always worked to explain tools in ways both humans and computers can understand. Communities, companies, and advocates help bridge this gap between people and machines. We’ve done ACID with database triggers and SQL, we’ve cleaned QuickBooks with SOAP, only to redefine CRUD in OpenAPI and employ well-described event-driven architecture with AsyncAPI.

But even with all these efforts to standardize, using APIs is often disappointing. The specifications describe the mechanics; we personally know what these things mean, and we fill in the blanks with our own know-how, should we have it.

Enter Model Context Protocol (MCP). It’s a protocol (you might even call it a kind of runtime) for hosts, servers, and clients, defined by a network of overlapping data sources and service, tool, and platform providers (like us!).

MCP is another way of writing metadata as a love letter to the future—our future. Together, building great solutions while guiding our helpful new AI counterparts with the meaning they need to do their jobs.

Model Context Protocol gives OpenAPI a bear hug and won’t let go. When an MCP tool needs to contact a web-based API for data or to trigger a state change, the OpenAPI specification is how that API gets described.

But around those specifications, and specifications for tools that aren’t well-defined by OpenAPI, MCP provides human- and LLM-readable knowledge, context, and a certain amount of nudging toward happy paths for innately non-deterministic (and imperfect!) systems. Other specification formats may have let us convey some of this know-how and meaning before, but here we expect it to be read (by humans and artificial intelligence alike) and put to use, not just run through another SDK generation grinder.

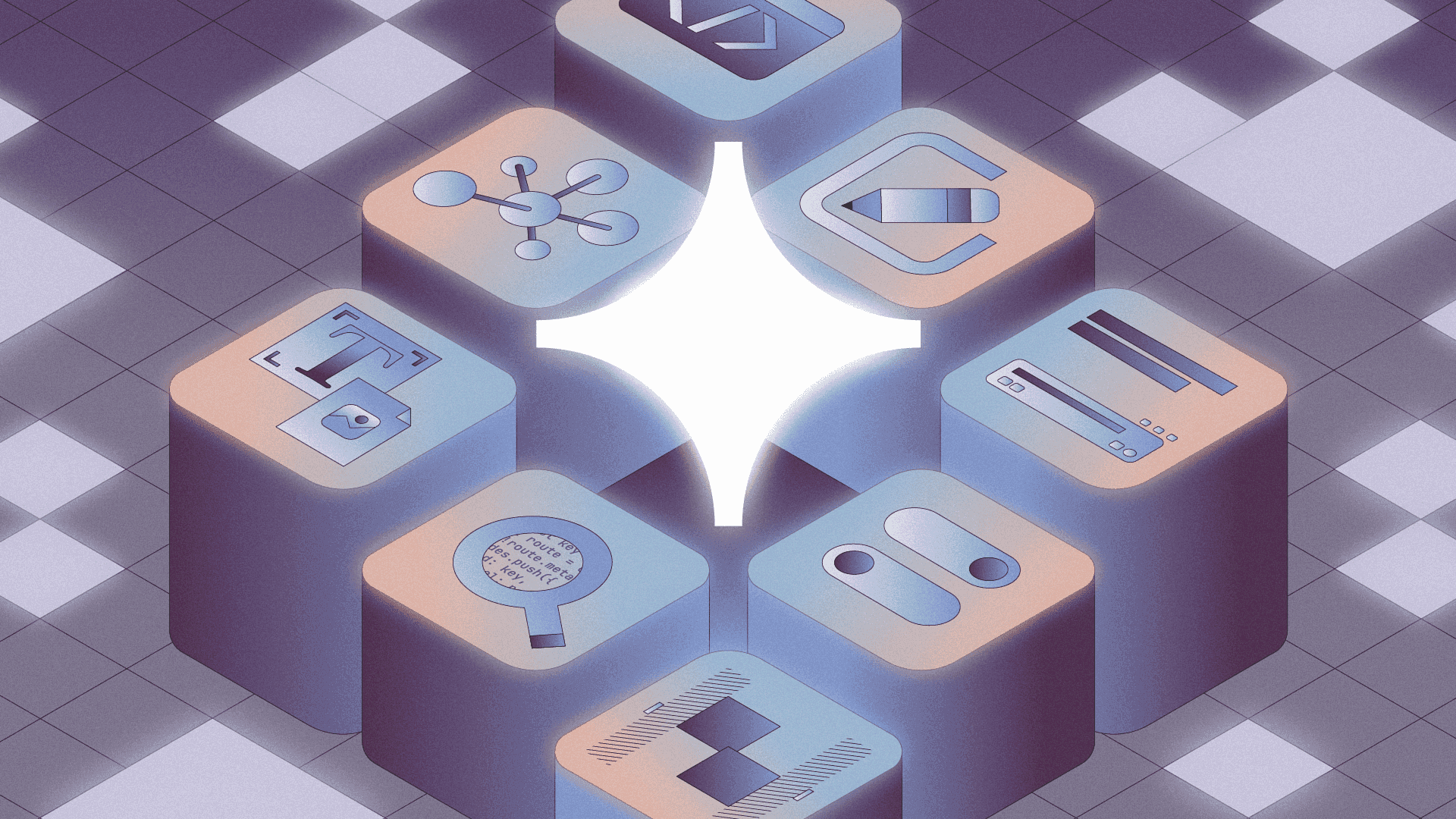

Most published or internally relied-upon API descriptions center around structured ceremonies, inputs, and outputs. They’re great at telling you what an API does, but not so great at explaining why it exists or when you should use it. You bring your own know-how to an accurately defined but otherwise unintelligent tool.

AI systems can’t reliably decide which API to use or when without more context. And they certainly can’t choose between using a REST API, querying your database, or executing a carefully crafted workflow purely on inference and intuition. AI needs help choosing which tool to use for which job, and when.

At its core, MCP standardizes how applications provide context to large language models (LLMs). Its website calls it a “USB-C port for AI applications,” which is a useful metaphor for something that connects so easily and in any direction.

MCP works behind the scenes using client-server technology, but users won’t see any of this technical setup when using the system. Here’s the basic flow:

- An AI application acts as the host. It runs an MCP client component that interacts with each tool that presents itself as an MCP server.

- The host calls the client; the client calls the tool server, receives a response, and sends it back to the host.

- The client can also collect information from the MCP servers about their capabilities, their formats, and even the prompting techniques needed to extract the most value.
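The flow above can be sketched in a few lines of plain Python. This is a toy, in-process illustration, not the official MCP SDK: `ToyServer`, `ToyClient`, and `host_answer` are hypothetical names, and the JSON-RPC-style messages are simplified rather than the exact wire format. The method names `tools/list` and `tools/call` mirror the ones the MCP specification uses for tool discovery and invocation.

```python
import json


class ToyServer:
    """Stands in for an MCP server: advertises its tools and executes calls."""

    def __init__(self):
        # Hypothetical tool with canned data, for illustration only.
        self._tools = {
            "get_inventory": {
                "description": "Return current stock counts by pet status.",
                "handler": lambda args: {"available": 12, "sold": 3},
            }
        }

    def handle(self, request: str) -> str:
        msg = json.loads(request)
        if msg["method"] == "tools/list":
            result = {
                "tools": [
                    {"name": name, "description": tool["description"]}
                    for name, tool in self._tools.items()
                ]
            }
        elif msg["method"] == "tools/call":
            params = msg["params"]
            tool = self._tools[params["name"]]
            result = {"content": tool["handler"](params.get("arguments", {}))}
        else:
            error = {"code": -32601, "message": "Method not found"}
            return json.dumps({"jsonrpc": "2.0", "id": msg["id"], "error": error})
        return json.dumps({"jsonrpc": "2.0", "id": msg["id"], "result": result})


class ToyClient:
    """Stands in for the MCP client component that lives inside the host."""

    def __init__(self, server: ToyServer):
        self._server = server
        self._next_id = 0

    def _request(self, method: str, params=None):
        self._next_id += 1
        payload = {"jsonrpc": "2.0", "id": self._next_id,
                   "method": method, "params": params or {}}
        response = json.loads(self._server.handle(json.dumps(payload)))
        if "error" in response:
            raise RuntimeError(response["error"]["message"])
        return response["result"]

    def list_tools(self):
        return self._request("tools/list")["tools"]

    def call_tool(self, name: str, arguments: dict):
        return self._request("tools/call", {"name": name, "arguments": arguments})["content"]


def host_answer(client: ToyClient):
    """The host discovers tools, picks one, and relays the result."""
    tools = client.list_tools()
    chosen = tools[0]["name"]  # a real host would let the model choose
    return client.call_tool(chosen, {})
```

Calling `host_answer(ToyClient(ToyServer()))` walks the whole loop: the host asks the client, the client asks the server, and the response travels back the same way.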

MCP hosts can handle many business tasks with the right human help. These hosts need good knowledge, understanding, trust, and proper access to a defined set of tools.

These MCP servers can be handwritten by engineers, or auto-generated by SDK generators like Speakeasy, which can transform OpenAPI specifications directly into MCP-compatible servers. Your platform of choice may just handle some of those details (including all kinds of pesky authentication and access policy nuance) for you.
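To make the OpenAPI-to-MCP idea concrete, here is a minimal sketch of turning one OpenAPI operation into a tool descriptor with the `name`, `description`, and `inputSchema` fields that MCP tool definitions carry. The helper `operation_to_tool` is hypothetical, and this is not how Speakeasy or any other generator actually does it; real generators also deal with request bodies, authentication, and much more.

```python
def operation_to_tool(path: str, method: str, operation: dict) -> dict:
    """Map a single OpenAPI operation to an MCP-style tool descriptor (sketch)."""
    properties = {}
    required = []
    for param in operation.get("parameters", []):
        # Copy the parameter schema and carry its description along with it.
        schema = dict(param.get("schema", {"type": "string"}))
        if "description" in param:
            schema["description"] = param["description"]
        properties[param["name"]] = schema
        if param.get("required", False):
            required.append(param["name"])

    # Prefer the richest human-readable text the spec offers.
    description = (operation.get("description")
                   or operation.get("summary")
                   or f"{method.upper()} {path}")
    fallback_name = f"{method}_{path.strip('/').replace('/', '_')}"
    return {
        "name": operation.get("operationId", fallback_name),
        "description": description,
        "inputSchema": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }


# An operation in the style of the Pet Store example.
operation = {
    "operationId": "getPetById",
    "summary": "Find pet by ID",
    "parameters": [
        {
            "name": "petId",
            "in": "path",
            "required": True,
            "description": "ID of pet to return",
            "schema": {"type": "integer"},
        }
    ],
}
tool = operation_to_tool("/pet/{petId}", "get", operation)
```

Notice that the generated description is only as good as the `summary` it came from, which is why the extra context discussed in this article has to be written by someone who knows what the tool is for.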

Think about MCP architecture like different parts working together. Many types of apps can act as the main hub that connects to all your tools (like databases, APIs, and workflows you use every day). Each tool can tell AI systems what it’s for and when it should be used. This setup makes everything work together smoothly while making sure AI understands the right time and reason to use each tool.

What makes using MCP powerful is that each tool keeps its own identity within a bigger system. This helps AI understand not just how to use the tools, but when they’re most helpful for what you’re trying to do.

But the technical implementation isn’t what makes an MCP description special.

What’s revolutionary about MCP is that it doesn’t just connect AI to data—it connects AI to meaning and intent. Traditional API descriptions tell you what an API does and what data it returns. But they don’t tell you what that data means. They don’t convey purpose. And for AI systems trying to make sense of our messy digital world, that distinction is everything. MCP bridges that gap by providing not just access to tools, but context around how and why to use them.

There’s a classic example implementation of the OpenAPI spec that documents a hypothetical REST-based API for a Pet Store’s inventory and order management. One real-world example of Model Context Protocol implementation is adding context to a particular sequence of API calls to search, select, and complete a purchase for something like your puppy pal’s favorite brand of dog food. The next time an AI agent is asked to set up a recurring order for birdseed, it’s going to pick the tool that best matches the scenario instead of re-interpreting an API spec’s intent within a vacuum every time.
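As a rough sketch of that idea, the search, select, and purchase calls can be wrapped in a single tool whose description says when to reach for it. Everything here is made up for illustration: the in-memory `CATALOG`, the function names, and the "pick the cheapest match" rule are assumptions, and the lower-level functions stand in for real API calls.

```python
# Hypothetical in-memory catalog standing in for the store's API.
CATALOG = [
    {"sku": "DOG-001", "name": "Good Boy Kibble", "category": "dog food", "price": 24.0},
    {"sku": "BRD-007", "name": "Songbird Seed Mix", "category": "birdseed", "price": 9.5},
]


def search_products(query: str) -> list:
    """Stand-in for a search endpoint: match on category or product name."""
    q = query.lower()
    return [p for p in CATALOG if q in p["category"] or q in p["name"].lower()]


def create_order(sku: str, quantity: int) -> dict:
    """Stand-in for an order endpoint."""
    product = next(p for p in CATALOG if p["sku"] == sku)
    return {"sku": sku, "quantity": quantity, "total": product["price"] * quantity}


def reorder_pet_supplies(query: str, quantity: int = 1) -> dict:
    """Workflow tool: search, select the cheapest match, then place the order."""
    matches = search_products(query)
    if not matches:
        return {"status": "not_found", "query": query}
    cheapest = min(matches, key=lambda p: p["price"])
    order = create_order(cheapest["sku"], quantity)
    return {"status": "ordered", **order}


# The descriptor carries the context a bare API spec leaves out.
REORDER_TOOL = {
    "name": "reorder_pet_supplies",
    "description": (
        "Use when a customer wants to buy or restock a known kind of pet "
        "supply, such as dog food or birdseed. Searches the catalog, selects "
        "the cheapest match, and places the order in one step. "
        "Do not use for browsing or price checks."
    ),
    "inputSchema": {
        "type": "object",
        "properties": {
            "query": {"type": "string", "description": "Product name or category."},
            "quantity": {"type": "integer", "description": "Units to order."},
        },
        "required": ["query"],
    },
}
```

An agent that reads `REORDER_TOOL` no longer has to rediscover the right call sequence; the description tells it what the workflow is for and when to leave it alone.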

Adding text fields to specifications is definitely more work. Many specs are auto-generated. But the benefits of adding information that bridges the technical to the human domain are clear:

- Descriptive metadata helps AIs and users discover resources

- Contextual metadata situates tools within broader workflows

- Purpose metadata explicitly communicates why a tool exists

- Semantic metadata provides information about the meaning of data elements

With this kind of metadata, AI models can make dramatically better decisions. They can understand when a particular tool is appropriate. They can create more effective plans by knowing the purpose of each available tool. They can reduce errors by understanding the limitations of the data they’re working with.
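Here is one way those four kinds of metadata might sit side by side on a tool. The `ToolMetadata` class and its field names are hypothetical, not part of any specification, and `pick_tool` uses a deliberately naive word-overlap score as a stand-in for the model's judgment. A real LLM reasons over this text far more flexibly; the point is only that richer text gives it more to work with.

```python
from dataclasses import dataclass, field


@dataclass
class ToolMetadata:
    name: str
    descriptive: str   # what the tool is, for discovery
    contextual: str    # where it sits in a broader workflow
    purpose: str       # why the tool exists
    semantic: dict = field(default_factory=dict)  # meaning of data elements

    def as_prompt_text(self) -> str:
        """Render the metadata as text a host could place in a model's context."""
        lines = [
            f"Tool: {self.name}",
            f"What: {self.descriptive}",
            f"When: {self.contextual}",
            f"Why: {self.purpose}",
        ]
        lines += [f"Field {key}: {meaning}" for key, meaning in self.semantic.items()]
        return "\n".join(lines)


def pick_tool(request: str, tools: list) -> ToolMetadata:
    """Naive stand-in for model choice: most words shared with the request wins."""
    words = set(request.lower().split())

    def score(tool: ToolMetadata) -> int:
        return len(words & set(tool.as_prompt_text().lower().split()))

    return max(tools, key=score)


inventory = ToolMetadata(
    name="get_inventory",
    descriptive="Counts of pets in stock grouped by status.",
    contextual="Call before quoting availability to a customer.",
    purpose="Lets staff and agents check stock levels.",
    semantic={"status": "One of available, pending, or sold."},
)
recurring = ToolMetadata(
    name="create_recurring_order",
    descriptive="Schedules a repeating purchase of one product.",
    contextual="Call after the customer has picked a product and wants a recurring delivery.",
    purpose="Saves customers from placing the same order every month.",
    semantic={"interval_days": "Days between deliveries."},
)

chosen = pick_tool("set up a recurring order for birdseed", [inventory, recurring])
```

With these two tools, the request matches `create_recurring_order` because its "when" and "why" text talks about recurring orders, which is exactly the information a bare input/output schema would not contain.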

Model Context Protocol helps people and the systems we build choose the right tools for every job from the datastore to the pet store and beyond.

For developers, using MCP leads to better discovery and more successful usage of the tools you create. It means less time debugging weird behaviors and unfathomable choices. Users benefit from a better understanding of an intelligence’s catalog of capabilities, and the best practices and prompts to use.

When AI systems understand the meaning behind tools, they can chain them together in intelligent ways. They can become truly autonomous agents, capable of solving complex problems with a deep understanding of each tool’s purpose and relationship to the human world.

Is MCP a perfect specification? No. There’s still plenty of debate around implementation details and adoption challenges. The onus is on platform and tool providers to codify and enforce the appropriate permissions within a domain. As with all things AI, trust comes first.

But MCP is absolutely a step in the right direction toward a future with self-selecting, interoperable, even intelligent tools.

Ultimately, we want AI systems that don’t just access and retrieve data. We want AI systems that understand what each datum means. We want to build AI apps and systems that grasp the why, not just the what.

Special thanks to Mathew Pregasen for his help with this article.
